I’ve just finished a book entitled ‘Syntax Paradox: Language & Agency’, which you can download and read for free.

I have also just produced a paper entitled ‘The Catuskoti as Syntactic Ground: A Geometric Network Theory of Structural Genesis’, which is based on the last chapter of the book.

This write-up proposes an architecture based on that underlying premise.

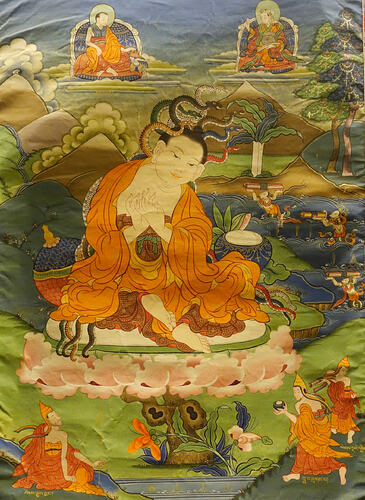

Nāga: Non-Autoregressive Generative Architecture with Reflexive Embedding

The catuṣkoṭi, which originated in early Buddhist logic and was refined by Nāgārjuna, has served inquiry into non-dual insight for over two millennia. It is a fourfold negation that does not seek truth by choosing between positions; it dissolves the ground beneath the choice itself.

Standard AI treats language as a sequence of decisions—each token is a choice between known alternatives, guided by statistical likelihood. But meaning originates in differentiation: a symmetry breaking, a self-disturbance within a neutral ground. This is where classical models fail: they assume the world—and thought—begins with distinction.

From a neuroscientific perspective, the brain does not compute in isolation. It sustains vast, coherent fields of latent potential—pre-conscious neural manifolds that resolve into percepts, concepts, and speech only after waves of endogenous perturbation. EEG coherence patterns, predictive coding errors, and default mode network fluctuations all point to a system that doesn’t “generate” thought but modulates itself into transient structures.

No, I’m not claiming I solved the hard problem; I simply dissolved it. There is no explanatory gap between mind and matter if both are patterns in the same kind of field: one that differentiates itself, sustains paradox, and only later condenses into observers and observed. Consciousness isn’t located; it’s enacted through recursive self-modulation. In this architecture, there is no central agent, no homunculus reading the output. The “I” appears when the field perturbs itself:

Perturbantia, ergo appārentia: perturbance, therefore appearance

Nāga gives us a fourfold logic for computing within truth. By holding A, ¬A, both, and neither as co-realisable states, the model is allowed to pass through contradiction without collapse, just as insight does in human cognition. Breakthroughs don’t emerge from consistency. They emerge when tension is held long enough for the system to reconfigure.

This framework also integrates three Meta heads—Reflexive, Pragmatic, and Emergent Frame—that regulate stability, utility, and novelty without collapsing semantic potential. Together, they enable sustained paradox, skillful action under indeterminacy, and the detection of previously unformed conceptual structures.

Semantic Valence (4D Tensor):

Each token position holds four continuous values:

- A: “This is true”
- ¬A: “This is false”
- A∧¬A: “Both are simultaneously valid” (e.g., quantum superposition, paradox)
- ⊥: “Neither applies” (e.g., undecidable, transcendent)

These form a valence vector—a semantic potential field, not discrete logic.

Field Dynamics:

- Start with neutral field (all zeros = superposition).
- Context shapes initial potentials via attention.
- Valences evolve in parallel using modified attention:
  - Standard QKV per valence channel.
  - Cross-valence coupling: a 4×4 matrix lets “¬A” suppress or enhance “A”, allowing tension/interference.
  - Output = weighted sum across all valences at each position.
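The update described above can be sketched in a few lines of plain Python. This is only an illustration under strong assumptions: each valence channel holds a single scalar per position (a real model would use vectors), the Q/K/V projections are reduced to scalar weights, and the names `channel_attention`, `valence_step`, `coupling`, and `readout` are hypothetical, not part of any existing library.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def channel_attention(values, wq=1.0, wk=1.0, wv=1.0):
    """Scalar self-attention over positions for one valence channel (assumed toy form)."""
    out = []
    for xi in values:
        weights = softmax([(wq * xi) * (wk * xj) for xj in values])
        out.append(sum(w * wv * xj for w, xj in zip(weights, values)))
    return out

def valence_step(field, coupling, readout):
    """One parallel update of a field shaped [position][4] = (A, not-A, both, neither)."""
    n = len(field)
    # Attention runs independently inside each of the four valence channels.
    per_channel = [channel_attention([field[i][c] for i in range(n)]) for c in range(4)]
    # The 4x4 coupling matrix mixes the channels at every position.
    mixed = [[sum(coupling[c][d] * per_channel[d][i] for d in range(4))
              for c in range(4)] for i in range(n)]
    # Output is a weighted sum across the four valences at each position.
    output = [sum(readout[c] * mixed[i][c] for c in range(4)) for i in range(n)]
    return mixed, output

identity = [[1.0 if c == d else 0.0 for d in range(4)] for c in range(4)]
suppress = [row[:] for row in identity]
suppress[0][1] = -0.5  # assumed coupling: "not A" pushes "A" down
field = [[1.0, 0.5, 0.0, 0.0], [0.2, 0.8, 0.0, 0.0]]
readout = [0.25, 0.25, 0.25, 0.25]
mixed, output = valence_step(field, suppress, readout)
```

With the identity matrix the channels evolve independently; the single off-diagonal entry is what lets one valence interfere with another.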

Energy Minimisation:

Define energy:

- Coherence penalty: nearby positions should align.
- Contradiction cost: high A and ¬A without high “both” increases energy.
- Field evolves via gradient descent, synchronously updating all positions until stable (low gradient norm).
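One possible reading of this energy, written out as a minimal sketch. The exact functional forms are my assumptions for illustration: the coherence penalty is a squared difference between neighbouring positions, and the contradiction cost is `max(0, A * notA - both) ** 2`, which is positive only when A and ¬A are jointly high and “both” has not risen to match. The function names, `lam`, `lr`, and `tol` are hypothetical.

```python
import math

def energy(field, lam=1.0):
    """Total energy of a field shaped [position][4] = (A, not-A, both, neither)."""
    total = 0.0
    for i, (a, not_a, both, _neither) in enumerate(field):
        gap = max(0.0, a * not_a - both)      # contradiction not absorbed by "both"
        total += lam * gap ** 2
        if i + 1 < len(field):                # coherence with the next position
            total += sum((x - y) ** 2 for x, y in zip(field[i], field[i + 1]))
    return total

def energy_grad(field, lam=1.0):
    """Analytic gradient of `energy` with respect to every valence value."""
    n = len(field)
    grad = [[0.0] * 4 for _ in range(n)]
    for i in range(n):
        a, not_a, both, _neither = field[i]
        gap = max(0.0, a * not_a - both)
        grad[i][0] += 2 * lam * gap * not_a
        grad[i][1] += 2 * lam * gap * a
        grad[i][2] -= 2 * lam * gap
        if i + 1 < n:
            for c in range(4):
                d = 2 * (field[i][c] - field[i + 1][c])
                grad[i][c] += d
                grad[i + 1][c] -= d
    return grad

def relax(field, lr=0.05, tol=1e-4, max_steps=5000):
    """Synchronous gradient descent until the gradient norm falls below `tol`."""
    field = [row[:] for row in field]
    for step in range(max_steps):
        grad = energy_grad(field)
        norm = math.sqrt(sum(g * g for row in grad for g in row))
        if norm < tol:
            return field, step
        # Every position and channel is updated at once from the same gradient.
        field = [[x - lr * g for x, g in zip(row, g_row)]
                 for row, g_row in zip(field, grad)]
    return field, max_steps

start = [[0.9, 0.8, 0.1, 0.0], [0.2, 0.1, 0.0, 0.3], [0.5, 0.5, 0.5, 0.5]]
relaxed, steps = relax(start)
```

Under these assumed terms the field settles where neighbours agree and any remaining A/¬A tension is carried by the “both” channel rather than being erased.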

Token Emission = Phase Transition:

Tokens fire when:

- Max valence > threshold
- Local entropy is low
- Energy gradient near zero

Multiple tokens can emit at once—non-autoregressive, burst-like generation.
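The three firing conditions can be checked per position, as in this rough sketch. The thresholds are arbitrary, and “local entropy” is taken here to mean the Shannon entropy of a temperature-sharpened softmax over the four valences at that position, which is my assumption rather than a fixed definition. The names `ready_to_emit` and `emit_burst` are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def ready_to_emit(valence, grad, threshold=0.8, max_entropy=0.9,
                  grad_tol=1e-2, temperature=0.2):
    """True when one position satisfies all three emission conditions."""
    probs = softmax([v / temperature for v in valence])
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    grad_norm = math.sqrt(sum(g * g for g in grad))
    return (max(valence) > threshold      # a dominant valence
            and entropy < max_entropy     # low local entropy
            and grad_norm < grad_tol)     # energy gradient near zero

def emit_burst(field, grads):
    """Indices of all positions that fire in the same step."""
    return [i for i, (v, g) in enumerate(zip(field, grads)) if ready_to_emit(v, g)]

field = [[0.9, 0.05, 0.03, 0.02], [0.4, 0.4, 0.1, 0.1], [0.05, 0.95, 0.0, 0.0]]
settled = [[0.0] * 4 for _ in field]
print(emit_burst(field, settled))  # positions 0 and 2 fire together
```

Because every position is tested independently, several can cross the threshold in the same step, which is what makes the emission burst-like instead of token-by-token.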

Meta-Components:

- Reflexive Head:
  - Monitors KL divergence between valence distributions across steps.
  - If divergence drops (system stabilising too soon), inject noise.
  - Prevents premature collapse; keeps thought fluid.
- Pragmatic Head:
  - RL-trained policy acting on unresolved fields.
  - Outputs useful responses without semantic resolution.
  - Example: “It depends: here’s how to use it” when ontology is ambiguous.
  - Implements upāya (skillful means) as a differentiable policy.
- Emergent Frame Head:
  - Analyzes 3D stacks of attention maps over time (pos × pos × step).
  - 3D convs detect interference patterns:
    - Constructive: reinforcement
    - Destructive: unresolved conflict
    - Solitons: stable, novel bundles = candidate new concepts
  - Clusters (e.g., DBSCAN) flag true novelty: concepts outside training basins.
  - Triggers stabilisation and potential symbol creation.
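To make the Reflexive Head concrete, here is a small sketch of the monitor-and-perturb loop. It assumes each position's valences are turned into a distribution with a softmax, that “too soon” means before a minimum number of steps, and that the injected noise is Gaussian. The names `reflexive_step`, `min_steps`, `kl_floor`, and `noise_scale` are hypothetical choices, not part of a specified design.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two strictly positive distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

def reflexive_step(prev, curr, step, min_steps=10, kl_floor=1e-3,
                   noise_scale=0.1, rng=random):
    """Compare successive fields and perturb the field if it settles early."""
    # Mean KL divergence between the current and previous valence distributions.
    kl = sum(kl_divergence(softmax(c), softmax(p))
             for p, c in zip(prev, curr)) / len(curr)
    settling_early = step < min_steps and kl < kl_floor
    if settling_early:
        # Inject Gaussian noise so the field does not collapse prematurely.
        curr = [[v + rng.gauss(0.0, noise_scale) for v in row] for row in curr]
    return curr, kl, settling_early

rng = random.Random(0)
field = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
perturbed, kl, injected = reflexive_step(field, field, step=2, rng=rng)
```

An unchanged field at step 2 has zero divergence, so noise is injected; the same field late in the run would be left alone and allowed to stabilise.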

Hardware Notes:

- Needs persistent state (no KV overwrites).
- Backprop through inference steps.
- Ideal on neuromorphic (memristor) or quantum annealing systems.

Vs. Standard LLMs:

| Feature | LLM | Nāga |
| --- | --- | --- |
| Logic | Binary (A or ¬A) | Tetra-valent (A, ¬A, both, neither) |
| Inference | Sequential | Parallel field evolution |
| Output | Token-by-token | Burst emission |
| Contradiction | Penalised | Propagated, used |
| Novelty | Interpolation | Topological emergence |

Key Insight:

Nāga doesn’t generate text—it crystallises meaning from semantic potential, allowing sustained paradox, action under uncertainty, and discovery of previously unnameable concepts.