After I build a custom tokenizer, if I later have more data to train on, should I call train() or train_from_iterator() again on the same saved tokenizer? Will the originally trained tokenizer be overwritten?

Training follows the algorithm of the tokenizer's model and trainer (e.g. BPE, WordPiece), which builds the vocabulary from how tokens are used in your training dataset.

If you use add_tokens(), it bypasses the algorithm and adds your specific tokens directly to your Tokenizer.

If I have trained a tokenizer, whether from an old tokenizer or from scratch, does that mean I have to use it with a model trained from scratch? In other words, can the newly trained tokenizer not be used with any existing model?

Hello.

I’m watching the Training a new tokenizer from an old one chapter.

I run into a problem when using tokenizer.train_new_from_iterator with the code shown there.

Memory usage keeps growing until it causes an OOM error, but I don’t think it’s a placement problem.

How do you solve it?

    from tqdm import tqdm

    def get_training_corpus(dataset, batch_size):
        # Yield one batch of texts at a time
        for start_idx in tqdm(range(0, len(dataset), batch_size)):
            yield dataset[start_idx : start_idx + batch_size]["text"]

    training_corpus = get_training_corpus(dataset, batch_size=5000)
    new_tokenizer = tokenizer.train_new_from_iterator(training_corpus, 30000)
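
One thing that might be worth trying, as a sketch rather than a confirmed fix: as far as I understand, the trainer keeps counts for the distinct pre-tokenized words it sees, so memory can keep growing with the corpus even when the input is a generator. Capping how many batches reach the trainer is one way to test that. The `limit_batches` helper and the toy `dataset` below are hypothetical names for illustration, not part of any library.

```python
from itertools import islice

def get_training_corpus(dataset, batch_size):
    # Lazily yield one batch of texts at a time
    for start_idx in range(0, len(dataset), batch_size):
        yield dataset[start_idx : start_idx + batch_size]

def limit_batches(batches, max_batches):
    # Hypothetical helper: stop after max_batches batches, leaving the rest unread
    return islice(batches, max_batches)

# Toy stand-in for a real dataset's "text" column (made-up data)
dataset = [f"sample text {i}" for i in range(100)]

limited = list(limit_batches(get_training_corpus(dataset, batch_size=10), max_batches=3))
print(len(limited), sum(len(batch) for batch in limited))
```

In real use the capped iterator would be passed to train_new_from_iterator in place of training_corpus. If memory stays flat with a small cap and grows as the cap is raised, the size of the corpus itself is the likely cause.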

Hi there,

    def get_training_corpus():
        return (
            raw_datasets["train"][i : i + 1000]["whole_func_string"]
            for i in range(0, len(raw_datasets["train"]), 1000)
        )

    training_corpus = get_training_corpus()

I think there’s a mistake here. The function was written to make the generator reusable. However, as far as I know, we need to wrap the generator in a list to reuse it. I think the correct way is this:

    def get_training_corpus():
        return (
            raw_datasets["train"][i : i + 1000]["whole_func_string"]
            for i in range(0, len(raw_datasets["train"]), 1000)
        )

    training_corpus = list(get_training_corpus())
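
To make the reuse question concrete, here is a small sketch with a made-up `texts` list standing in for raw_datasets["train"]. It compares three things: iterating the same generator object twice, calling the function again to get a fresh generator, and materializing the batches with list().

```python
# Toy stand-in for raw_datasets["train"]["whole_func_string"] (made-up data)
texts = [f"def f{i}(): pass" for i in range(10)]

def get_training_corpus(batch_size=4):
    # Each call builds and returns a brand-new generator object
    return (texts[i : i + batch_size] for i in range(0, len(texts), batch_size))

gen = get_training_corpus()
first_pass = [len(batch) for batch in gen]    # consumes the generator
second_pass = [len(batch) for batch in gen]   # same object, nothing left to yield

fresh_pass = [len(batch) for batch in get_training_corpus()]  # new generator, full data

corpus_list = list(get_training_corpus())     # reusable, but all batches sit in memory
list_pass_1 = [len(batch) for batch in corpus_list]
list_pass_2 = [len(batch) for batch in corpus_list]

print(first_pass, second_pass, fresh_pass, list_pass_1 == list_pass_2)
```

So a single generator object is indeed exhausted after one pass. Calling the function again gives a fresh one without holding the whole corpus in memory, while list() trades memory for being able to iterate the same object repeatedly.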

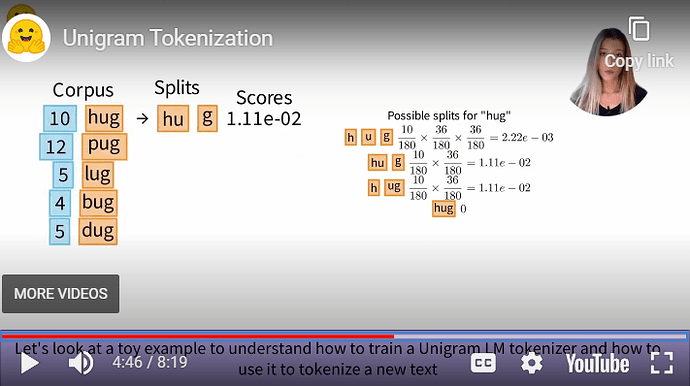

In the implementation of def encode_word(word, model) in the section Unigram tokenization - Hugging Face NLP Course, why do we initialise the first index with score 1? {"start": 0, "score": 1}

The QuestionAnswering model tries to predict the start and end positions of the answer. Let’s say we have a paragraph with 50 words, and the correct answer lies between positions 30 and 40. If the predicted start and end positions are 25 and 10, that doesn’t make sense, because the start should always be less than the end.
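
A common way to handle this in post-processing is to score (start, end) pairs jointly and skip any pair where the end comes before the start, instead of taking the two argmax positions independently. The sketch below uses made-up logits; `best_valid_span` and `max_answer_len` are hypothetical names, not a library API.

```python
# Made-up logits for a 6-token context, for illustration only
start_logits = [0.1, 0.2, 0.1, 3.0, 0.5, 0.2]
end_logits = [0.1, 2.5, 0.2, 0.3, 2.0, 0.1]

def best_valid_span(start_logits, end_logits, max_answer_len=30):
    # Search only spans with start <= end and at most max_answer_len tokens
    best = None
    for start, start_logit in enumerate(start_logits):
        last_end = min(start + max_answer_len, len(end_logits))
        for end in range(start, last_end):
            score = start_logit + end_logits[end]
            if best is None or score > best[0]:
                best = (score, start, end)
    return best

# Independent argmax can give an invalid span (end before start)
naive_start = max(range(len(start_logits)), key=start_logits.__getitem__)
naive_end = max(range(len(end_logits)), key=end_logits.__getitem__)

score, start, end = best_valid_span(start_logits, end_logits)
print((naive_start, naive_end), (start, end), score)
```

With these numbers the independent argmax picks start 3 and end 1, while the constrained search returns the span from 3 to 4.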

What is the mathematical definition of the score? I guess it is just the probability of each potential token, since the encode_word function sets the starting score to 1 for the Viterbi algorithm.

A concise mathematical expression would be better than pouring in long, confusing text.

However, considering the expression score = model[token] + best_score_at_start, I believe the score should be -log(p(token)), which contradicts the starting condition.

ps.

HF's own video is also a mess when it comes to the definition of the score.

Confused too. Why not 0?

I have the same question. Since the score is defined using logarithms, the initial score should be 0.

    model = {token: -log(freq / total_sum) for token, freq in token_freqs.items()}
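
One way to check what the starting value does: since each token's score is -log(p) and scores are added along a path, every candidate segmentation starts from the same initial value, so that value is only a constant offset. Starting at 0 (which is -log(1)) makes the final score equal to the negative log-probability of the segmentation; starting at 1 shifts every final score by 1 but should not change which segmentation wins. Below is a minimal sketch with made-up frequencies. The `init_score` parameter is my addition for the comparison and is not in the course code.

```python
from math import log, isclose

# Made-up token frequencies, for illustration only
token_freqs = {"h": 5, "u": 5, "g": 5, "hu": 10, "ug": 20, "hug": 15}
total_sum = sum(token_freqs.values())
model = {token: -log(freq / total_sum) for token, freq in token_freqs.items()}

def encode_word(word, model, init_score=0.0):
    # best[i] = (lowest cost of segmenting word[:i], start index of its last token)
    best = [(init_score, None)] + [(None, None)] * len(word)
    for start in range(len(word)):
        cost_at_start = best[start][0]
        if cost_at_start is None:
            continue  # no valid segmentation ends at this position
        for end in range(start + 1, len(word) + 1):
            token = word[start:end]
            if token in model:
                cost = cost_at_start + model[token]
                if best[end][0] is None or cost < best[end][0]:
                    best[end] = (cost, start)
    if best[-1][0] is None:
        return None, None  # word cannot be segmented with this vocabulary
    # Walk back from the end of the word to recover the tokens
    tokens, end = [], len(word)
    while end > 0:
        start = best[end][1]
        tokens.insert(0, word[start:end])
        end = start
    return tokens, best[-1][0]

tokens_0, score_0 = encode_word("hug", model, init_score=0.0)
tokens_1, score_1 = encode_word("hug", model, init_score=1.0)
print(tokens_0, score_0, tokens_1, score_1)
```

Both runs pick the same tokens, and the two final scores differ by exactly the initial value.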

I am following Byte-Pair Encoding tokenization - Hugging Face NLP Course.

I am trying to replicate the result using train_new_from_iterator:

    from transformers import AutoTokenizer

    old_tokenizer = AutoTokenizer.from_pretrained("gpt2")

    corpus = [
        "This is the Hugging Face Course.",
        "This chapter is about tokenization.",
        "This section shows several tokenizer algorithms.",
        "Hopefully, you will be able to understand how they are trained and generate tokens.",
    ]

    tokenizer = old_tokenizer.train_new_from_iterator(corpus, vocab_size=50)

But the result is totally different, and tokenizer.vocab_size is 257, not 50. Why?
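
A likely explanation, as far as I understand it: GPT-2 uses byte-level BPE, so the retrained tokenizer always starts from the full 256-symbol byte alphabet, and the special token <|endoftext|> is added on top. A requested vocab_size below that floor leaves no room for merges, which gives 256 + 1 = 257. The course section instead builds BPE by hand from only the characters that appear in the corpus, so its vocabulary and merges look different. The `vocab_budget` helper below is hypothetical and only does this arithmetic; it does not call the library.

```python
BYTE_ALPHABET_SIZE = 256  # one symbol per possible byte value
NUM_SPECIAL_TOKENS = 1    # assumption: only <|endoftext|>, as for GPT-2

def vocab_budget(requested_vocab_size):
    # Hypothetical helper: minimum vocab size and the room left for merges.
    # The merge count is an upper bound; a tiny corpus may yield fewer.
    floor = BYTE_ALPHABET_SIZE + NUM_SPECIAL_TOKENS
    max_merges = max(0, requested_vocab_size - floor)
    return floor, max_merges

floor_50, merges_50 = vocab_budget(50)
floor_300, merges_300 = vocab_budget(300)
print(floor_50, merges_50)
print(floor_300, merges_300)
```

Under these assumptions, asking for 50 leaves zero merges and a vocabulary of 257, while asking for 300 would leave room for up to 43 merges.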