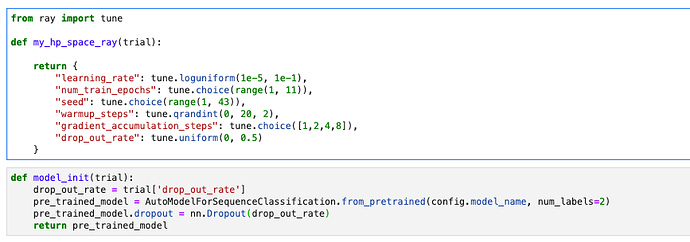

I’m trying to run a hyperparameter search for the distilBERT model on a sequence classification task. I also want to try different dropout rates. Can trainer.hyperparameter_search do this? I tried the following code, but it is not working:

The model_init is called once when the Trainer is initialized, with no trial. So your model_init needs to check whether trial is None before using it.
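As a rough sketch of what that check could look like for DistilBERT with the optuna backend: the checkpoint name, the `num_labels=2` setting, the search ranges and the helper name `suggest_dropout` are all assumptions made for illustration, not something from the original code. `dropout` and `seq_classif_dropout` are fields of the DistilBERT config.

```python
from typing import Any, Dict, Optional

MODEL_NAME = "distilbert-base-uncased"  # assumed checkpoint, swap in your own


def suggest_dropout(trial: Optional[Any]) -> Dict[str, float]:
    """Return DistilBERT config overrides for this trial (hypothetical helper)."""
    if trial is None:
        # The Trainer constructor calls model_init without a trial:
        # return no overrides so the checkpoint defaults are used.
        return {}
    return {
        # Ranges are illustrative assumptions, not recommendations.
        "dropout": trial.suggest_float("dropout", 0.0, 0.3),
        "seq_classif_dropout": trial.suggest_float("seq_classif_dropout", 0.0, 0.5),
    }


def model_init(trial):
    # Imported here so the helper above can be exercised without transformers.
    from transformers import AutoConfig, AutoModelForSequenceClassification

    # Keyword arguments matching config attributes override the stored values.
    config = AutoConfig.from_pretrained(
        MODEL_NAME, num_labels=2, **suggest_dropout(trial)
    )
    return AutoModelForSequenceClassification.from_pretrained(MODEL_NAME, config=config)
```

You would then pass `model_init=model_init` (and no `model`) to the Trainer. Note this assumes optuna; other backends may hand a different kind of object to model_init.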

Thanks for replying.

Is there an example of using ray tune with the huggingface Trainer to search over the dropout rate? I’m not sure how to handle the ray tune trial object properly in the model_init.

I found lots of material about searching over learning rate, batch size, etc., but none of it covers searching over different dropout rates. The documentation says model_init together with the trial object should be able to handle this…

Late reply but this is how I got around tuning model config parameters (e.g. dropout) and training parameters (e.g. learning_rate) with optuna:

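    import optuna
    from transformers import AutoModelForSpeechSeq2Seq, Seq2SeqTrainer

    # config, model_args, training_args, train_ds, eval_ds, feature_extractor,
    # data_collator and _compute_metrics are defined elsewhere in the script.

    def _hp_space(trial: optuna.trial.Trial):
        # Empty on purpose: every parameter is suggested inside _model_init.
        return {}

    def _model_init(trial: optuna.trial.Trial):
        # trial is None for the call made when the trainer is constructed.
        if trial is not None:
            # Model config parameter (dropout).
            config.update(
                {
                    'dropout': trial.suggest_float('dropout', 0.0, 0.1, log=False),
                }
            )
            # Training parameter (learning rate), set directly on the arguments.
            training_args.learning_rate = trial.suggest_float('learning_rate', 5e-8, 5e-6, log=True)
        return AutoModelForSpeechSeq2Seq.from_pretrained(
            model_args.model_name_or_path,
            config=config,
            revision=model_args.model_revision,
        )

    hpo_trainer = Seq2SeqTrainer(
        model=None,
        args=training_args,
        train_dataset=train_ds,
        eval_dataset=eval_ds,
        tokenizer=feature_extractor,
        data_collator=data_collator,
        compute_metrics=_compute_metrics,
        model_init=_model_init,
    )

    best_trial = hpo_trainer.hyperparameter_search(
        direction='minimize',
        backend='optuna',
        hp_space=_hp_space,
        n_trials=20,
    )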

Inspiration was taken from this test suite.

In case someone else is still struggling with this.
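One possible follow-up once the search has finished: the object returned by hyperparameter_search has a `hyperparameters` dict, and with the optuna backend this should contain everything the trial suggested, config and training parameters mixed together. A small sketch for separating them again before a final training run is below. The helper name `split_hyperparameters` and the `CONFIG_KEYS` tuple are hypothetical, and the values in the example are made up.

```python
from typing import Any, Dict, Iterable, Tuple

# Names that were suggested for the model config inside model_init (assumption:
# only 'dropout', as in the snippet in this thread).
CONFIG_KEYS = ("dropout",)


def split_hyperparameters(
    hyperparameters: Dict[str, Any],
    config_keys: Iterable[str] = CONFIG_KEYS,
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Split tuned values into (model config overrides, training overrides)."""
    keys = set(config_keys)
    config_overrides = {k: v for k, v in hyperparameters.items() if k in keys}
    training_overrides = {k: v for k, v in hyperparameters.items() if k not in keys}
    return config_overrides, training_overrides


# Example with made-up values standing in for best_trial.hyperparameters.
config_overrides, training_overrides = split_hyperparameters(
    {"dropout": 0.05, "learning_rate": 1e-6}
)
# Then, in the training script:
#   config.update(config_overrides)
#   for name, value in training_overrides.items():
#       setattr(training_args, name, value)
```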