I am using the Hugging Face library to fine-tune speech recognition for a local dialect. It was working very well before, but since yesterday I have been getting this error:

```
RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cpu and cuda:0! (when checking argument for argument weight in method wrapper___slow_conv2d_forward)
```

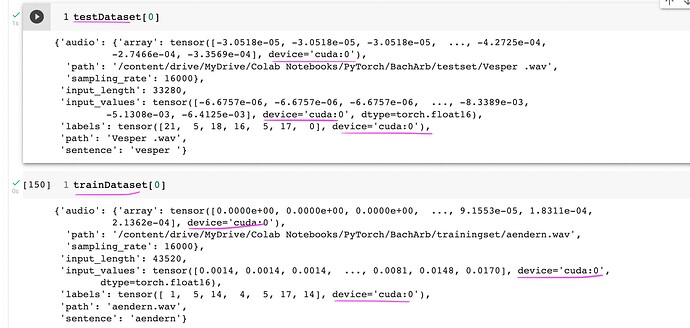

The model is downloaded from the Hugging Face Hub, and I made sure that all input data is on cuda.

As the screenshots above show, all of the input values are on cuda.

As usual, I also moved the whole model to CUDA:

```python
from transformers import Wav2Vec2ForCTC

model_name = "jonatasgrosman/wav2vec2-large-xlsr-53-german"

# .cuda() moves all registered parameters and buffers to the default GPU
model = Wav2Vec2ForCTC.from_pretrained(model_name).cuda()
```
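To check whether `.cuda()` really moved everything, one could list every parameter and buffer whose device differs from the target. This is a minimal sketch in plain PyTorch; the helpers `find_off_device_tensors` and `_on_device` are hypothetical names, not part of `transformers`. It compares only the device type unless the target includes an index, because `torch.device("cuda")` does not compare equal to a tensor's `cuda:0`.

```python
import torch
from torch import nn


def _on_device(t: torch.Tensor, target: torch.device) -> bool:
    # torch.device("cuda") has no index, while tensors report "cuda:0";
    # only compare the index when the target specifies one.
    if t.device.type != target.type:
        return False
    return target.index is None or t.device.index == target.index


def find_off_device_tensors(model: nn.Module, device="cuda"):
    """Return (name, device) pairs for parameters and buffers not on `device`."""
    target = torch.device(device)
    stray = []
    for name, param in model.named_parameters():
        if not _on_device(param, target):
            stray.append((name, str(param.device)))
    for name, buf in model.named_buffers():
        if not _on_device(buf, target):
            stray.append((name, str(buf.device)))
    return stray


if __name__ == "__main__":
    # With the model from the post (assumption: checkpoint already loaded):
    # print(find_off_device_tensors(model, "cuda"))
    demo = nn.Sequential(nn.Conv1d(1, 4, 3), nn.ReLU())
    print(find_off_device_tensors(demo, "cpu"))  # → []
```

An empty list means every registered tensor is on the target device; anything listed is a candidate for the CPU-side `weight` in the error.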

Given this runtime error, I would like to know what exactly is still on the CPU and how I could solve the problem.
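Since the error complains about the `weight` argument, one way to find the exact layer might be to register forward pre-hooks that compare each module's input device with the device of its `weight` attribute. This is a hedged sketch: `attach_device_check_hooks` is a hypothetical helper, and it only inspects `weight` (not other arguments), using the standard `register_forward_pre_hook` API. The hook runs before the failing layer's forward, so the mismatch is recorded even though the forward then raises.

```python
import torch
from torch import nn


def attach_device_check_hooks(model: nn.Module):
    """Record modules whose `weight` sits on a different device than their input.

    Returns the list that is filled during forward passes and the hook handles,
    so the hooks can be removed afterwards.
    """
    mismatches = []

    def make_hook(name):
        def hook(module, args):
            weight = getattr(module, "weight", None)
            if not isinstance(weight, torch.Tensor):
                return  # containers and weightless layers are skipped
            for arg in args:
                if isinstance(arg, torch.Tensor) and arg.device != weight.device:
                    mismatches.append((name, str(arg.device), str(weight.device)))
                    break
        return hook

    handles = [m.register_forward_pre_hook(make_hook(n))
               for n, m in model.named_modules()]
    return mismatches, handles


# Usage sketch (assumes `model` and a batch `input_values` already on CUDA):
# log, handles = attach_device_check_hooks(model)
# try:
#     model(input_values)
# finally:
#     for h in handles:
#         h.remove()
# print(log)  # each entry: (module name, input device, weight device)
```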