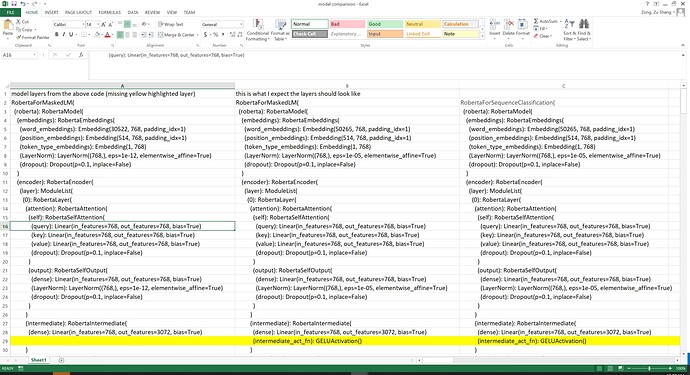

I am trying to train a masked language model from scratch. I use the code below to create the RoBERTa model architecture, but when I compare it with the pretrained RoBERTa model, I find that mine does not have the GELU activation layer. Could someone explain how to do this correctly? Thanks.

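    from transformers import RobertaConfig, RobertaForMaskedLM

    # Build a RoBERTa config from scratch (no pretrained weights).
    config = RobertaConfig(
        vocab_size=50265,
        max_position_embeddings=514,
        num_attention_heads=12,
        num_hidden_layers=12,
        type_vocab_size=1,
    )

    # Randomly initialised masked-LM model built from that config.
    model = RobertaForMaskedLM(config=config)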
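A printed module tree only lists submodules, so one way to check whether the activation is really missing is to inspect the layer directly. The sketch below is a minimal check, not a definitive diagnosis. It assumes that `RobertaConfig` falls back to `hidden_act="gelu"` when that argument is not passed, and that whether the activation shows up in `print(model)` may depend on the installed `transformers` version. The reduced layer count and the names `intermediate`, `act`, and `matches_gelu` are illustrative choices, not part of the original code.

```python
import torch
from transformers import RobertaConfig, RobertaForMaskedLM

config = RobertaConfig(
    vocab_size=50265,
    max_position_embeddings=514,
    num_attention_heads=12,
    num_hidden_layers=2,  # assumption: fewer than the post's 12, only to keep this check fast
    type_vocab_size=1,
)
model = RobertaForMaskedLM(config=config)
model.eval()

# The activation name stored in the config (not passed explicitly above).
print(config.hidden_act)

# The object that the first encoder layer actually calls after its dense projection.
intermediate = model.roberta.encoder.layer[0].intermediate
act = intermediate.intermediate_act_fn
print(type(act).__name__, isinstance(act, torch.nn.Module))

# Numerical check: does the block's output equal GELU(dense(x))?
x = torch.randn(1, 4, config.hidden_size)
with torch.no_grad():
    out = intermediate(x)
    ref = torch.nn.functional.gelu(intermediate.dense(x))
matches_gelu = torch.allclose(out, ref, atol=1e-5)
print(matches_gelu)
```

If `matches_gelu` is true, the activation is applied in the forward pass even when the printed tree does not list it.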

The RoBERTa layers of this model look like this:

    ------ a lot of layers…
    (encoder): RobertaEncoder(
      (layer): ModuleList(
        (0): RobertaLayer(
          (attention): RobertaAttention(
            (self): RobertaSelfAttention(
              (query): Linear(in_features=768, out_features=768, bias=True)
              (key): Linear(in_features=768, out_features=768, bias=True)
              (value): Linear(in_features=768, out_features=768, bias=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
            (output): RobertaSelfOutput(
              (dense): Linear(in_features=768, out_features=768, bias=True)
              (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
          )
          (intermediate): RobertaIntermediate(
            (dense): Linear(in_features=768, out_features=3072, bias=True)
            --------- missing gelu activation layer here
          )
    ---------- a lot of layers…

When I load a pretrained model, it looks like this:

    -------- a lot of layers…
    (encoder): RobertaEncoder(
      (layer): ModuleList(
        (0): RobertaLayer(
          (attention): RobertaAttention(
            (self): RobertaSelfAttention(
              (query): Linear(in_features=768, out_features=768, bias=True)
              (key): Linear(in_features=768, out_features=768, bias=True)
              (value): Linear(in_features=768, out_features=768, bias=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
            (output): RobertaSelfOutput(
              (dense): Linear(in_features=768, out_features=768, bias=True)
              (LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
              (dropout): Dropout(p=0.1, inplace=False)
            )
          )
          (intermediate): RobertaIntermediate(
            (dense): Linear(in_features=768, out_features=3072, bias=True)
            (intermediate_act_fn): GELUActivation()
          )
    ----------- a lot of layers…
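To see exactly where the two printouts differ, they can be compared line by line. The sketch below uses only the standard library and a short excerpt copied from the two dumps in this post. The variable names `scratch`, `pretrained`, and `changes` are illustrative.

```python
import difflib

# Excerpts from the two printouts in this post (first encoder layer only).
scratch = """\
(dense): Linear(in_features=768, out_features=768, bias=True)
(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
(dropout): Dropout(p=0.1, inplace=False)
(dense): Linear(in_features=768, out_features=3072, bias=True)
""".splitlines()

pretrained = """\
(dense): Linear(in_features=768, out_features=768, bias=True)
(LayerNorm): LayerNorm((768,), eps=1e-05, elementwise_affine=True)
(dropout): Dropout(p=0.1, inplace=False)
(dense): Linear(in_features=768, out_features=3072, bias=True)
(intermediate_act_fn): GELUActivation()
""".splitlines()

# Keep only removed ("-") and added ("+") lines, skipping the diff file headers.
changes = [
    line
    for line in difflib.unified_diff(scratch, pretrained, lineterm="", n=0)
    if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
]
for line in changes:
    print(line)
```

Besides the activation line, the diff also surfaces the `LayerNorm` epsilon (`1e-12` in the from-scratch model, `1e-05` in the pretrained one). If matching the pretrained value matters, passing `layer_norm_eps=1e-5` to `RobertaConfig` would be one way to do it.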