I'm confused about how to load a pre-trained tokenizer for a model. I'm trying to use someone's model from their repo, where they have saved the following:

- checkpoints of their fine-tuned BERT uncased model

- vocabulary files (multiple), probably all generated after training a BertWordPieceTokenizer

Now, if I want to fine-tune the model on my data, how do I load the pre-trained tokenizer and use it on my dataset? Do I use the vocabulary files, or should I load the tokenizer the same way I load the model, as I have seen in an example here:

Please guide me. Thanks

hi @Sabs101

Please check Fine-tuning a model with the Trainer API - Hugging Face NLP Course.

The most important part is writing a tokenize_function, if you need one:

    def tokenize_function(example):
        return tokenizer(example["sentence1"], example["sentence2"], truncation=True)

We can help more if you share your initial model (probably bert-base-uncased) and some lines from your dataset.

Hi @mahmutc,

Thank you for sharing the link, but I'm still confused. I want to know how to load my pre-trained tokenizer so I can use it on my own dataset. Should I load it the same way I load the model, or, if a vocab file is present alongside the model, can I call .from_pretrained('vocab.txt') to load my tokenizer?

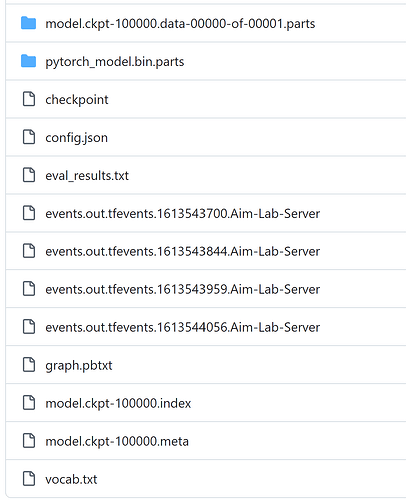

The model I am using is a fine-tuned version of original ‘bert-base-uncased’, fine-tuned for a different language. Here is what I have from the model:

I'm attaching a link to it as well, in case it helps:

Roman_Urdu_BERT/roman_urdu at master · usamakh20/Roman_Urdu_BERT · GitHub
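
On the vocab.txt question above: a minimal sketch, assuming the transformers library is installed and the repo's vocabulary is a standard BERT WordPiece vocab.txt (one token per line, line index = token id). One way that should work is to point BertTokenizer.from_pretrained at the folder that contains vocab.txt rather than at the file itself. The toy vocabulary, the temporary folder, and the helper name load_tokenizer_from_vocab_dir are made up for illustration so the snippet runs on its own; they are not taken from the Roman_Urdu_BERT repo.

```python
import os
import tempfile

from transformers import BertTokenizer

# Hypothetical stand-in for the repo's vocabulary file. A BERT vocab.txt is
# plain text with one token per line; the line index is the token id.
TOY_VOCAB = [
    "[PAD]", "[UNK]", "[CLS]", "[SEP]", "[MASK]",
    "mujhe", "yeh", "pasand", "hai", "##n",
]


def load_tokenizer_from_vocab_dir(vocab_dir: str) -> BertTokenizer:
    """Load a WordPiece tokenizer from a folder that contains vocab.txt."""
    # do_lower_case=True is an assumption that matches an uncased checkpoint.
    return BertTokenizer.from_pretrained(vocab_dir, do_lower_case=True)


# Write the toy vocabulary to a throwaway folder so the sketch is runnable
# without the real files.
tmp_dir = tempfile.mkdtemp()
with open(os.path.join(tmp_dir, "vocab.txt"), "w", encoding="utf-8") as f:
    f.write("\n".join(TOY_VOCAB) + "\n")

tokenizer = load_tokenizer_from_vocab_dir(tmp_dir)

# Inspect the WordPiece split and the ids the model would receive.
tokens = tokenizer.tokenize("Mujhe yeh pasand hain")
encoded = tokenizer("Mujhe yeh pasand hain", truncation=True)
print(tokens)
print(encoded["input_ids"])
```

To try this on the real files, replace tmp_dir with the local folder holding the vocab.txt that was used when the checkpoint was trained; the token ids have to line up with the model's embedding matrix, so if the repo ships several vocabulary files, only the one matching the checkpoint will give sensible results. The resulting tokenizer can then be used inside tokenize_function as in the reply above.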