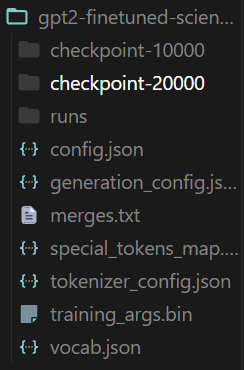

Hello folks, I am facing this issue and I don’t know how to deal with it. I finetuned gpt2 model on google colab and then installed pretrained model files locally but now when I am trying to use it locally it keeps giving me this error. The path provided is absolutely correct. Here are files in my directory.

Here is how code looks like:(I also tried using absolute path still no difference)

from transformers import GPT2LMHeadModel, GPT2Tokenizer

import requests

from googlesearch import search

# Load the fine-tuned model

fine_tuned_model = GPT2LMHeadModel.from_pretrained('gpt2-finetuned-science-20240111T135646Z-001/gpt2-finetuned-science/')

# Initialize GPT-2 tokenizer and model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-finetuned-science-20240111T135646Z-001/gpt2-finetuned-science/', pad_token='<pad>')

# Google Custom Search API configuration

API_KEY = 'AIzaSyCXjFWc4SsycKTTQHELH20_2fjsaB8n6UE'

CX = '922c9da5c6a21421d'

import spacy

from transformers import GPT2LMHeadModel, GPT2Tokenizer

def google_search(query, api_key, cx):

base_url = "https://www.googleapis.com/customsearch/v1"

params = {

'key': api_key,

'cx': cx,

'q': query,

}

response = requests.get(base_url, params=params)

results = response.json()

return results

# Load the spaCy English NLP model

nlp = spacy.load("en_core_web_sm")

# Function to extract keywords from a question

def extract_keywords(question):

doc = nlp(question)

named_entities = [ent.text for ent in doc.ents]

nouns = [token.text for token in doc if token.pos_ == "NOUN"]

keywords = named_entities + nouns

return keywords

# Function to generate response

def generate_response(prompt, max_length=50):

# Extract keywords from the prompt

keywords = extract_keywords(prompt)

# print(keywords)

input_ids = tokenizer.encode(prompt, return_tensors='pt', max_length=max_length, truncation=True)

attention_mask = input_ids.ne(tokenizer.pad_token_id).long()

output = fine_tuned_model.generate(input_ids, attention_mask=attention_mask, max_length=max_length, num_return_sequences=1)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

# Check if the main word is not present in the generated response

# main_word = prompt.split()[0].lower()

for keyword in keywords:

if keyword.lower() not in generated_text.lower().split(" "):

#Perform Google search and use the top result

results = google_search(prompt,'AIzaSyCXjFWc4SsycKTTQHELH20_2fjsaB8n6UE','922c9da5c6a21421d')

if 'items' in results and len(results['items']) > 0:

top_result = results['items'][0]['snippet']

return f"Sorry, I don't have information on that. Here's what I found on the web: {top_result}"

return generated_text

# Example usage

user_query = "What is banach space?"

bot_response = generate_response(user_query)

print(bot_response)