I am trying to run a large language model, DeepSeek-R1-Distill-Qwen-32B-Uncensored-Q8_0-GGUF (~34.8 GB), on Hugging Face Spaces with an Nvidia L40S GPU (48 GB VRAM). The model loads into VRAM successfully, but a runtime error occurs during initialization, after which the model starts loading again and memory is exhausted. The logs contain no specific error message. The failure occurs a few minutes after initialization starts, with no explicit indication that a timeout was exceeded.

I need help diagnosing and solving this problem. Below I provide all the configuration details, steps taken, and application code.

Are you using Ollama, or llama.cpp? Ollama seems to have a model-specific issue.

If you know exactly how to run it, it would be easier if you tell me about it )

I’m sorry… If I knew, I would tell you straight away, but I haven’t managed to build llama-cpp-python 0.3.5 or later in a Hugging Face GPU Gradio Space either. DeepSeek should require at least 0.3.5 or 0.3.6. Ollama is not available because it is not installed on the system to begin with. Perhaps it is available in a Docker Space…?

Works but old

https://github.com/abetlen/llama-cpp-python/releases/download/v0.3.4-cu124/llama_cpp_python-0.3.4-cp310-cp310-linux_x86_64.whl

Doesn’t work (or rather, works in CPU mode…)

--extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cu121

llama-cpp-python
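One way to confirm whether an installed wheel is a CPU-only build is to ask the bindings directly. The sketch below assumes the installed llama-cpp-python version exposes the low-level `llama_supports_gpu_offload()` binding; the helper name `llama_cpp_gpu_status` is made up for illustration, and the import is guarded so the script also runs where the package is missing.

```python
import importlib


def llama_cpp_gpu_status() -> str:
    """Report whether the installed llama-cpp-python build can offload to GPU."""
    try:
        llama_cpp = importlib.import_module("llama_cpp")
    except (ImportError, OSError, RuntimeError):
        # Package missing, or its shared library failed to load.
        return "not installed"
    # Assumption: this binding exists in the installed version.
    check = getattr(llama_cpp, "llama_supports_gpu_offload", None)
    if check is None:
        return "unknown"
    return "gpu" if check() else "cpu-only"


if __name__ == "__main__":
    print("llama-cpp-python build:", llama_cpp_gpu_status())
```

If this prints `cpu-only`, setting `n_gpu_layers` when loading the model will not move any layers to the GPU, which would match the "works in CPU mode" behavior described above.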

It can’t use GGUF, but I’ll leave the code I made for a Zero GPU Space using Transformers and bitsandbytes (BnB). This should make the model usable. I hope llama-cpp-python will be available soon…

Huge respect )) I had been trying for 5 days to get it up and running with no luck, but it’s already working, thanks!

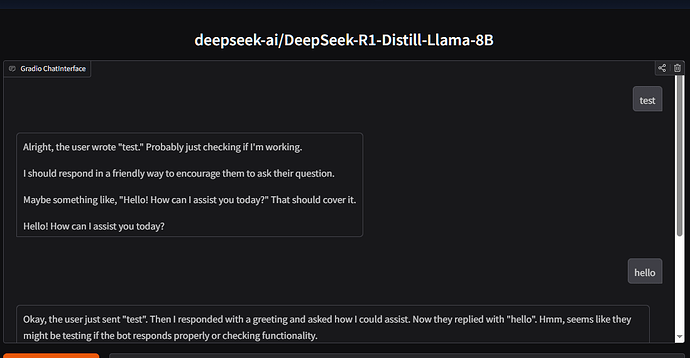

I got excited too early. It responded normally to a “hi” message once; the rest of the time it just echoes my message back and that’s it. But having it running at all is progress, so I’ll look into it further.

===== Application Startup at 2025-03-14 18:08:23 =====

Could not load bitsandbytes native library: /usr/lib/x86_64-linux-gnu/libstdc++.so.6: version GLIBCXX_3.4.32' not found (required by /usr/local/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cpu.so)
Traceback (most recent call last):
  File "/usr/local/lib/python3.10/site-packages/bitsandbytes/cextension.py", line 85, in <module>
    lib = get_native_library()
  File "/usr/local/lib/python3.10/site-packages/bitsandbytes/cextension.py", line 72, in get_native_library
    dll = ct.cdll.LoadLibrary(str(binary_path))
  File "/usr/local/lib/python3.10/ctypes/__init__.py", line 452, in LoadLibrary
    return self._dlltype(name)
  File "/usr/local/lib/python3.10/ctypes/__init__.py", line 374, in __init__
    self._handle = _dlopen(self._name, mode)
OSError: /usr/lib/x86_64-linux-gnu/libstdc++.so.6: version GLIBCXX_3.4.32' not found (required by /usr/local/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cpu.so)

↑ Those bitsandbytes warnings are expected on ZeroGPU ↑

GLIBCXX_3.4.32' not found

Don’t worry about what this message means. It’s just something like that.

By the way, it was buggy, so I fixed it.

About 1 time in 10 it responds normally to “hello”, but it can’t handle anything more complicated than that, so I’m still looking for a solution.

I think I probably made a mistake somewhere. I’ll check it tomorrow.

Thank you.

Maybe fixed.

Unfortunately no. I tried disabling quantization, but then the model does not fit in memory. I also tried switching to 8-bit quantization, but the behavior did not change significantly.
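A rough back-of-the-envelope check shows why the unquantized model cannot fit in 48 GB. This is only a sketch: the parameter count of about 32.8 billion is an assumption (it is not stated in this thread), and the figures cover weights only, not the cache or activations. The helper name `weight_memory_gb` is made up for illustration.

```python
VRAM_GB = 48.0      # L40S, as stated in the thread
N_PARAMS = 32.8e9   # assumption: approximate parameter count of a 32B Qwen model


def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # 8.5 bits/weight approximates GGUF Q8_0 (8-bit values plus per-block scales).
    for label, bits in [("fp16/bf16", 16), ("Q8_0 GGUF", 8.5), ("8-bit", 8), ("4-bit", 4)]:
        gb = weight_memory_gb(N_PARAMS, bits)
        verdict = "fits" if gb < VRAM_GB else "does not fit"
        print(f"{label:>10}: {gb:5.1f} GB of weights, {verdict} in {VRAM_GB:.0f} GB")
```

Under these assumptions the Q8_0 estimate comes out close to the ~34.8 GB file size mentioned at the top of the thread, and the 16-bit weights alone exceed the available VRAM. Whatever remains after the weights is what the cache and activations have to share.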

I tried adding a system prompt, but it doesn’t affect the result either.

That’s strange… I wonder if it’s different from the model I’m using for testing…

I’m testing it again now. BTW, that’s normal for quantization-related things. I quantized it because I didn’t have enough VRAM.

Yes, I saw in the code that you applied quantization to 4 bits, and I’m trying a different model now, I’ll report back soon.

I cannot find the original model, DeepSeek-R1-Distill-Qwen-32B-Uncensored, in search. I only see quantized versions of it, not the original files. Is it not available on Hugging Face, and should it be taken from elsewhere?

This one. nicoboss/DeepSeek-R1-Distill-Qwen-32B-Uncensored · Hugging Face

I’ve figured out the cause: it’s a VRAM problem. The standard Transformers cache implementation is easy to use, but it eats up VRAM…
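To get a feel for how quickly a key/value cache grows with context length, here is a minimal estimate. The architecture numbers (64 layers, 8 key/value heads, head dimension 128) are assumptions based on the published Qwen2.5-32B configuration and should be checked against the model's `config.json`; the function name is made up for illustration. Real usage can be higher because of allocator overhead and temporary copies.

```python
def kv_cache_bytes(
    n_tokens: int,
    n_layers: int = 64,       # assumption: Qwen2.5-32B layer count
    n_kv_heads: int = 8,      # assumption: grouped-query attention KV heads
    head_dim: int = 128,      # assumption: hidden_size / num_attention_heads
    bytes_per_elem: int = 2,  # fp16 / bf16 cache entries
) -> int:
    """Lower-bound size of the KV cache for one sequence, in bytes."""
    # Factor 2: one key tensor and one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * n_tokens


if __name__ == "__main__":
    for tokens in (1, 4096, 32768):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>6} tokens -> {gib:.3f} GiB")
```

Because the cost is linear in the number of tokens, a long conversation history that is re-fed on every turn can consume the VRAM left over after the weights are loaded.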

I think I’ll try to implement a better version tomorrow.

For now, I’ve uploaded a version that doesn’t remember the conversation history, but there are no problems with the operation.
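The uploaded code is not shown in this thread, so the following is only a sketch of what "not remembering the conversation history" can look like in a chat handler. The function `build_messages` and its parameters are hypothetical, and the history is assumed to be a list of `{"role": ..., "content": ...}` dicts.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(
    message: str,
    history: List[Message],
    system_prompt: str = "",
    keep_history: bool = False,
    max_turns: int = 4,
) -> List[Message]:
    """Build the prompt messages, optionally dropping or trimming the history."""
    messages: List[Message] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    if keep_history and max_turns > 0:
        # Keep only the most recent turns (one turn = user + assistant message)
        # so the prompt, and with it the cache, stays bounded.
        messages.extend(history[-2 * max_turns:])
    messages.append({"role": "user", "content": message})
    return messages


if __name__ == "__main__":
    past = [
        {"role": "user", "content": "hi"},
        {"role": "assistant", "content": "Hello!"},
    ]
    print(build_messages("How are you?", past))                     # stateless
    print(build_messages("How are you?", past, keep_history=True))  # with history
```

With `keep_history=False` every request is independent, which trades conversational memory for a small, predictable prompt length.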

I’m running using

Nvidia 1x L40S

vCPU: 8

RAM: ~62 GB

VRAM (GPU memory): 48 GB

and the model responds much faster and always answers the first message, but it is not stable: after the first message it hangs and does not respond to further messages.