Hey friends

I started by just helping a few devs troubleshoot weird RAG issues on forums.

Chunking bugs. Missing context. FAISS pulling totally unrelated documents.

At first, I thought: “Easy fix. Probably bad chunking or wrong embedding.”

But then I saw it happen again. And again. And again.

Not just random edge cases — core architectural flaws.

So instead of replying one by one, I wrote a full semantic theory + open-source tool to fix it.

Here’s the fire, and here’s my extinguisher:

What’s Actually Breaking in RAG

1. Token-based chunking kills meaning.

Chunking by character or token count sounds good — until your sentence gets sliced mid-thought and the embedding captures noise instead of intent.
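Here’s a quick way to see the slicing problem. This is a minimal sketch with made-up FAQ text. Whitespace-separated words stand in for model tokens, and `fixed_window_chunks` and `sentence_chunks` are hypothetical helpers. Splitting on sentence punctuation is only the crudest kind of boundary-aware chunking, not the concept-boundary splitter WFGY describes.

```python
import re


def fixed_window_chunks(text: str, size: int) -> list[str]:
    """Naive chunking: cut every `size` words, ignoring sentence boundaries."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def sentence_chunks(text: str, max_words: int) -> list[str]:
    """Pack whole sentences into chunks of at most `max_words` words.

    A single sentence longer than `max_words` still becomes its own chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


# Made-up FAQ text, for illustration only.
doc = ("Product X has a 12-month warranty covering manufacturing defects. "
       "Refunds are available within 7 days of purchase. "
       "Refunds are paid to the original payment method.")

for chunk in fixed_window_chunks(doc, 6):
    print("fixed:   ", chunk)   # 2nd chunk: "covering manufacturing defects. Refunds are available"
for chunk in sentence_chunks(doc, 12):
    print("sentence:", chunk)   # each sentence stays intact
```

With a 6-word window, the second chunk glues the tail of the warranty sentence to the start of a refund sentence. That is exactly the kind of chunk whose embedding captures noise instead of intent. The sentence packer keeps each thought whole.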

2. Retrieval is semantically blind.

Cosine similarity ≠ semantic relevance. You get matches that are close in vector space but irrelevant in context.
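A toy example of similarity rewarding surface overlap instead of relevance. Assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, and both FAQ snippets are invented to mirror the warranty/refund case later in this post. Real dense embeddings are far better than word counts, but they can still rank a chunk high for sharing the query’s vocabulary and framing.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


query = "What's the warranty policy on X?"
chunks = {  # invented FAQ snippets
    "refund_faq": "Refund policy on X: the refund is paid in cash within 7 days.",
    "warranty": "Warranty: X is covered against manufacturing defects for 12 months.",
}
scores = {name: cosine(embed(query), embed(text)) for name, text in chunks.items()}
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score:.3f}")
# refund_faq: 0.390
# warranty: 0.239
```

The refund chunk wins purely because it shares "the", "policy", "on" and "x" with the question, even though it never mentions a warranty.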

3. System prompts can’t fight bad retrieval.

Once junk gets injected into the LLM context, it’s too late. The model hallucinates confidently… with citations.

My Solution: WFGY Engine (Semantic Firewall)

I built the WFGY architecture — think of it as a semantic meaning firewall for your RAG pipeline.

Components:

- ΔS (Delta Semantic Drift): Quantifies how much a chunk’s meaning drifts from the original query.

- λ_observe: Measures the internal meaning-focus of retrieved chunks. Think: coherence entropy.

- Semantic-Aware Chunking: Splits not by token count but by concept boundary.

- Layered Retrieval Filtering: Filters embeddings based on ΔS thresholds before hitting the LLM.

- Prompt Firewall Injection: Dynamically rewrites prompt instructions to guard hallucination-prone flows.
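The ΔS and layered-filtering ideas can be sketched roughly like this. It is a minimal sketch, not WFGY’s implementation: it assumes ΔS = 1 - cos(query, chunk) on precomputed embeddings, and the `Chunk` class, `firewall_filter`, the 0.3 threshold, and the 3-d toy vectors are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    vector: list[float]  # precomputed embedding (toy 3-d values below)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def delta_s(query_vec: list[float], chunk_vec: list[float]) -> float:
    """ASSUMED definition: ΔS = 1 - cos(query, chunk). 0 = same direction; larger = more drift."""
    return 1.0 - cosine(query_vec, chunk_vec)


def firewall_filter(query_vec: list[float], chunks: list[Chunk],
                    max_delta_s: float = 0.3) -> list[tuple[float, Chunk]]:
    """Hypothetical layered filter: drop chunks whose ΔS exceeds the threshold, return best-first."""
    scored = [(delta_s(query_vec, c.vector), c) for c in chunks]
    kept = [(ds, c) for ds, c in scored if ds <= max_delta_s]
    return sorted(kept, key=lambda pair: pair[0])


query = [1.0, 0.0, 0.2]
chunks = [
    Chunk("Warranty paragraph", [0.9, 0.1, 0.2]),
    Chunk("Refund FAQ", [0.4, 0.8, 0.1]),
]
for ds, chunk in firewall_filter(query, chunks):
    print(f"passes: {chunk.text} (ΔS={ds:.3f})")
# Only the warranty paragraph passes; the refund FAQ's ΔS is well above 0.3.
```

Under this assumed definition the filter is just a cosine-distance cutoff; what the sketch shows is where the check sits (between retrieval and prompt assembly). See the WFGY paper for how ΔS is actually defined.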

Visual Architecture (Click to see full PDF):

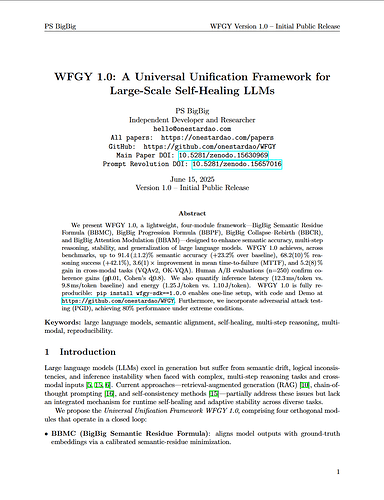

WFGY PDF: Full Paper + Math

Endorsed by Tesseract.js creator (36k GitHub stars)

2,000+ downloads in 1 month

Example Use Case:

Before:

“What’s the warranty policy on X?”

→ Retrieves FAQ chunk with unrelated refund info

→ Hallucinated answer: “X has a 7-day warranty with cash payout option”

After WFGY:

Same question → ΔS rejects refund chunk

→ Only warranty paragraph passes

→ Accurate response with fallback confidence score
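One way the "After" flow could end in a guarded prompt plus a confidence score, sketched under assumptions: `build_guarded_prompt` is a hypothetical helper, the guard wording is invented, and using 1 - (best ΔS) as the confidence is an illustrative choice rather than WFGY’s formula. If nothing passes the filter, the prompt tells the model to say so instead of improvising.

```python
def build_guarded_prompt(question: str, passed: list[tuple[float, str]]) -> tuple[str, float]:
    """Hypothetical prompt firewall: build context only from chunks that passed the ΔS filter.

    `passed` holds (delta_s, chunk_text) pairs. Returns (prompt, confidence), where
    confidence = 1 - best ΔS is an illustrative choice, not WFGY's formula.
    """
    if not passed:
        # Nothing survived the filter: instruct the model to decline instead of improvising.
        guard = ("No retrieved context passed the relevance check. "
                 "Say the answer is not in the knowledge base; do not guess.")
        return f"{guard}\n\nQuestion: {question}", 0.0
    best = min(ds for ds, _ in passed)
    context = "\n\n".join(text for _, text in sorted(passed))  # lowest ΔS first
    guard = "Answer only from the context below. If it does not contain the answer, say so."
    return f"{guard}\n\nContext:\n{context}\n\nQuestion: {question}", 1.0 - best


prompt, confidence = build_guarded_prompt(
    "What's the warranty policy on X?",
    [(0.05, "Warranty: X is covered against manufacturing defects for 12 months.")],
)
print(f"confidence={confidence:.2f}")  # confidence=0.95
print(prompt)
```

Calling it with an empty list gives the fallback path: a prompt that asks the model to admit the gap, with confidence 0.0.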

Closing Drunk Thoughts

I thought this was going to be a one-off “just fix your chunk size” thing.

Turns out it’s a semantic alignment problem across the entire pipeline.

If you’re building with RAG and feel like you’re duct-taping your way out of a tornado — yeah, I feel you. Let’s stop hallucinating and start firewalling. Drop a comment if you’re in the same hole.

And if your LLM still hallucinates after this, maybe it’s just drunk.