Hi,

In the notebook 06_sagemaker_metrics / sagemaker-notebook.ipynb, there is code that gets the training and eval metrics from the Hugging Face estimator at the end of training.

How can we get them DURING training?

Great, but I don't understand how we can get them DURING training to check how well (or badly) training is going, for example to detect overfitting and stop training before the last epoch.
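Once the per-evaluation `eval_loss` values are available, "detecting overfitting" can be expressed as a simple patience rule: flag it when `eval_loss` has not improved on its earlier best for a given number of consecutive evaluations. This is a minimal plain-Python sketch; the function name `is_overfitting` and the `patience` parameter are hypothetical, and the rule is just one common heuristic (similar in spirit to early stopping), not something taken from the notebook.

```python
from typing import Sequence


def is_overfitting(eval_losses: Sequence[float], patience: int = 2) -> bool:
    """Return True if eval_loss has not improved for `patience` consecutive evaluations.

    Hypothetical helper: `eval_losses` is assumed to be ordered by time,
    with one value per evaluation (e.g. one per epoch).
    """
    if patience < 1:
        raise ValueError("patience must be >= 1")
    if len(eval_losses) <= patience:
        # Not enough evaluations yet to decide.
        return False
    best_before = min(eval_losses[:-patience])
    # Overfitting if none of the last `patience` values beats the earlier best.
    return all(loss >= best_before for loss in eval_losses[-patience:])


# Usage: the loss goes down for two evaluations, then rises twice.
print(is_overfitting([0.50, 0.40, 0.42, 0.45], patience=2))  # True
print(is_overfitting([0.50, 0.40, 0.35, 0.33], patience=2))  # False
```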

My idea was to create a duplicate notebook for that purpose (without running fit() in the duplicate). The following text in the notebook seems to say that this is possible, but how do we get the exact training job name to pass to the TrainingJobAnalytics API call? Thanks.

> Note that you can also copy this code and run it from a different place (as long as connected to the cloud and authorized to use the API), by specifying the exact training job name in the TrainingJobAnalytics API call.
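A minimal sketch of the two-notebook idea, assuming the SageMaker Python SDK v2 and boto3: start the job with `fit(..., wait=False)` so the cell returns immediately, print `huggingface_estimator.latest_training_job.name`, and paste that name into the second notebook. If the name was not noted, boto3's `list_training_jobs` can find the most recent job whose name contains a given string (for example the `base_job_name` passed to the estimator, if any). The helper names `find_latest_job_name`, `fetch_metric_records` and `latest_per_metric` are hypothetical; only `TrainingJobAnalytics`, `list_training_jobs` and `DataFrame.to_dict` come from the real libraries. Metrics reach CloudWatch with some delay, so early calls may still warn "No metrics called ... found".

```python
from typing import Dict, Iterable, List, Optional


def find_latest_job_name(name_contains: str) -> Optional[str]:
    """Return the most recently created training job whose name contains `name_contains`."""
    import boto3  # imported lazily so latest_per_metric works without AWS access

    sm = boto3.client("sagemaker")
    resp = sm.list_training_jobs(
        NameContains=name_contains,
        SortBy="CreationTime",
        SortOrder="Descending",
        MaxResults=1,
    )
    summaries = resp["TrainingJobSummaries"]
    return summaries[0]["TrainingJobName"] if summaries else None


def fetch_metric_records(job_name: str, metric_names: List[str]) -> List[Dict]:
    """Pull the metrics CloudWatch has collected so far for a (possibly running) job."""
    from sagemaker.analytics import TrainingJobAnalytics

    analytics = TrainingJobAnalytics(job_name, metric_names=metric_names)
    df = analytics.dataframe(force_refresh=True)  # columns: timestamp, metric_name, value
    return df.to_dict("records")


def latest_per_metric(records: Iterable[Dict]) -> Dict[str, float]:
    """Keep only the most recent value of each metric (pure Python, no AWS needed)."""
    latest: Dict[str, tuple] = {}
    for r in records:
        name, ts, value = r["metric_name"], r["timestamp"], r["value"]
        if name not in latest or ts >= latest[name][0]:
            latest[name] = (ts, value)
    return {name: value for name, (_, value) in latest.items()}


# Training notebook (the cell returns immediately):
#   huggingface_estimator.fit(..., wait=False)
#   print(huggingface_estimator.latest_training_job.name)
# Second notebook, re-run periodically once the job is in training:
#   job = "<name printed above>"  # or find_latest_job_name("<your base_job_name>")
#   print(latest_per_metric(fetch_metric_records(job, ["loss", "eval_loss"])))
```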

Problem: “Warning: No metrics called eval_loss found”

I have a second question.

I copied the metrics code from 06_sagemaker_metrics / sagemaker-notebook.ipynb into a NER fine-tuning notebook on AWS SageMaker.

My NER notebook runs the run_ner.py script directly from GitHub (through the git_config argument of my Hugging Face estimator):

```python
metric_definitions = [
    {'Name': 'loss', 'Regex': r"'loss': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'learning_rate', 'Regex': r"'learning_rate': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_loss', 'Regex': r"'eval_loss': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_accuracy', 'Regex': r"'eval_accuracy': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_f1', 'Regex': r"'eval_f1': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_precision', 'Regex': r"'eval_precision': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_recall', 'Regex': r"'eval_recall': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_runtime', 'Regex': r"'eval_runtime': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'eval_samples_per_second', 'Regex': r"'eval_samples_per_second': ([0-9]+(.|e\-)[0-9]+),?"},
    {'Name': 'epoch', 'Regex': r"'epoch': ([0-9]+(.|e\-)[0-9]+),?"},
]
```
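The regexes can be checked locally before launching a job. This sketch runs Python's `re` module on two hypothetical log lines: one in the Python dict layout these regexes were written for, and one in a `key = value` layout, which as far as I can tell is how `Trainer.log_metrics` prints the final metrics summary that run_ner.py outputs after evaluation. The `ALT` pattern at the end is only a suggestion for that second layout, not something from the notebook.

```python
import re

# Patterns copied from metric_definitions (as raw strings).
EVAL_LOSS = r"'eval_loss': ([0-9]+(.|e\-)[0-9]+),?"
LR = r"'learning_rate': ([0-9]+(.|e\-)[0-9]+),?"

# Hypothetical log lines in two layouts.
dict_line = "{'eval_loss': 0.0612, 'eval_f1': 0.9123, 'epoch': 3.0}"
summary_line = "  eval_loss               =     0.0612"

print(re.search(EVAL_LOSS, dict_line).group(1))  # 0.0612
print(re.search(EVAL_LOSS, summary_line))        # None: no quotes and no colon

# Caveat: a value in scientific notation loses its exponent with these patterns.
print(re.search(LR, "{'learning_rate': 4.5e-05}").group(1))  # 4.5

# Possible alternative for "key = value" lines (an assumption, adapt as needed).
ALT = r"eval_loss\s*=\s*([0-9.eE+-]+)"
print(re.search(ALT, summary_line).group(1))  # 0.0612
```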

```python
from sagemaker.huggingface import HuggingFace

git_config = {'repo': 'https://github.com/huggingface/transformers.git', 'branch': 'v4.12.3'}

huggingface_estimator = HuggingFace(
    entry_point='run_ner.py',
    source_dir='./examples/pytorch/token-classification',
    git_config=git_config,
    # (...) other arguments omitted in this post
    metric_definitions=metric_definitions,
)
```
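Eval metrics can only appear in the logs during training if the script actually evaluates during training, and in Transformers v4.12 `evaluation_strategy` defaults to `"no"`. A sketch of hyperparameters that would request an evaluation after every epoch, assuming that run_ner.py parses its command-line arguments into `TrainingArguments` and that the HuggingFace estimator passes each `hyperparameters` entry to the script as `--key value`. These values are illustrative and would need to be merged with whatever is already passed in the elided arguments.

```python
# Hypothetical additions for run_ner.py (v4.12.3); each entry becomes "--key value".
hyperparameters = {
    "do_train": True,
    "do_eval": True,
    # Evaluate (and log the eval_* metrics) at the end of every epoch
    # instead of only once after training.
    "evaluation_strategy": "epoch",
    # Log the training loss and learning rate once per epoch as well.
    "logging_strategy": "epoch",
}

# Then pass it to the estimator together with the other arguments, e.g.
# HuggingFace(..., hyperparameters=hyperparameters, metric_definitions=metric_definitions)
```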

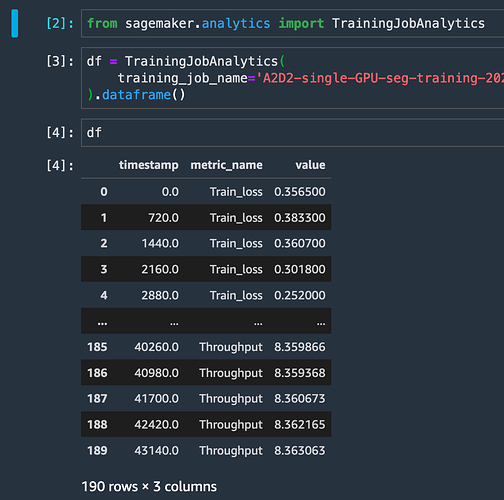

I have no problem of training but when I want to display the metrics, most of them were not found (see the following screen shot):

I compared the logging setup in the two scripts, and it is different.

In train.py:

```python
import logging
import sys

# Set up logging
logger = logging.getLogger(__name__)

logging.basicConfig(
    level=logging.getLevelName("INFO"),
    handlers=[logging.StreamHandler(sys.stdout)],
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
)
```

In run_ner.py:

```python
import logging
import sys

logger = logging.getLogger(__name__)

# Setup logging
logging.basicConfig(
    format="%(asctime)s - %(levelname)s - %(name)s - %(message)s",
    datefmt="%m/%d/%Y %H:%M:%S",
    handlers=[logging.StreamHandler(sys.stdout)],
)
```
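One way to check whether the different format strings matter is to format a sample message with each of them and run the `eval_loss` regex on the result. This sketch uses only the standard library; the sample message and the helper `render` are hypothetical. It only tests the format-string difference, not whether run_ner.py emits such a line at all or at which log level (and lines written with `print` bypass logging formats entirely).

```python
import logging
import re

# Same pattern as the eval_loss entry in metric_definitions (raw string).
EVAL_LOSS = r"'eval_loss': ([0-9]+(.|e\-)[0-9]+),?"

# Format strings from the two snippets in this post.
TRAIN_PY_FMT = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
RUN_NER_FMT = "%(asctime)s - %(levelname)s - %(name)s - %(message)s"
RUN_NER_DATEFMT = "%m/%d/%Y %H:%M:%S"


def render(fmt: str, message: str, datefmt=None) -> str:
    """Format a single INFO record the way a StreamHandler with this format would."""
    record = logging.LogRecord("run_ner", logging.INFO, "demo.py", 0, message, None, None)
    return logging.Formatter(fmt, datefmt=datefmt).format(record)


# Hypothetical message: a metrics dict as it might appear in the job's output.
message = "{'eval_loss': 0.0612, 'eval_f1': 0.9123, 'epoch': 3.0}"

for fmt, datefmt in [(TRAIN_PY_FMT, None), (RUN_NER_FMT, RUN_NER_DATEFMT)]:
    line = render(fmt, message, datefmt)
    match = re.search(EVAL_LOSS, line)
    # The regex is not anchored, so the prefix added by the formatter is ignored.
    print(line)
    print(match.group(1) if match else None)
```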

Is this the reason for the problem?