Hi,

I’m using Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-large-xlsr-53") to fine-tune on a Lithuanian-language dataset. I’ve limited the dataset to 100 hours of recordings, each between 1 and 15 seconds long.
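To make the filtering concrete, the duration limits above could be applied roughly as follows. This is only a sketch: `select_clips`, the `(clip_id, duration)` pair layout, and the in-order budget cut-off are illustrative assumptions, not the actual preprocessing code.

```python
def select_clips(clips, min_s=1.0, max_s=15.0, budget_hours=100.0):
    """Keep clips with duration in [min_s, max_s] until the hour budget is used up.

    `clips` is an iterable of (clip_id, duration_in_seconds) pairs
    (a hypothetical layout chosen for this example).
    """
    budget_s = budget_hours * 3600.0
    kept, total_s = [], 0.0
    for clip_id, duration in clips:
        if not (min_s <= duration <= max_s):
            continue  # clip is too short or too long
        if total_s + duration > budget_s:
            break  # adding this clip would exceed the budget
        kept.append(clip_id)
        total_s += duration
    return kept, total_s


# Tiny usage example with made-up durations (seconds)
demo = [("a", 0.5), ("b", 3.0), ("c", 20.0), ("d", 14.9)]
kept, total = select_clips(demo)
print(kept, total)
```

Clips "a" and "c" fall outside the 1 to 15 second range, so only "b" and "d" are kept.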

I’m following the example from this notebook: Fine-Tune Wav2Vec2 for English ASR in Hugging Face with Transformers by @patrickvonplaten.

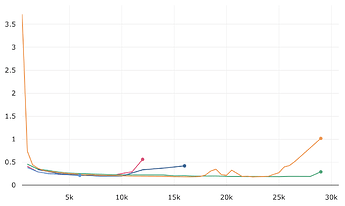

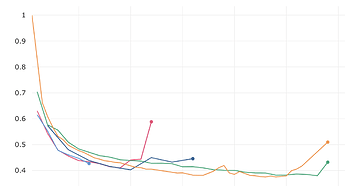

My issue is that the training loss and validation loss steadily decrease for the first few epochs, and then all metrics start to worsen.

Eval loss

WER

Train loss (although not so clearly visible)

My configuration is:

    model = Wav2Vec2ForCTC.from_pretrained(
        "facebook/wav2vec2-large-xlsr-53",
        activation_dropout=0.055,
        attention_dropout=0.094,
        hidden_dropout=0.047,
        feat_proj_dropout=0.04,
        mask_time_prob=0.082,
        layerdrop=0.041,
        gradient_checkpointing=True,
        ctc_loss_reduction="mean",
        pad_token_id=processor.tokenizer.pad_token_id,
        vocab_size=len(processor.tokenizer),
    )
    model.freeze_feature_extractor()

    training_args = TrainingArguments(
        output_dir="/workspace/models/wav2vec-lt",
        group_by_length=True,
        per_device_train_batch_size=24,
        gradient_accumulation_steps=2,
        evaluation_strategy="steps",
        num_train_epochs=30,
        fp16=True,
        save_steps=1000,
        eval_steps=1000,
        logging_steps=1000,
        learning_rate=2.34e-4,
        warmup_steps=500,
        save_total_limit=20,
        load_best_model_at_end=True,
        greater_is_better=False,
        log_level='debug',
        dataloader_num_workers=6,
        metric_for_best_model="wer",
    )

    trainer = Trainer(
        model=model,
        data_collator=data_collator,
        args=training_args,
        compute_metrics=compute_metrics,
        train_dataset=dataset_prepared['train'],
        eval_dataset=dataset_prepared['valid'],
        tokenizer=processor.feature_extractor,
        callbacks=[EarlyStoppingCallback(early_stopping_patience=5, early_stopping_threshold=0.0001)],
    )
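With `eval_steps=1000` and `early_stopping_patience=5`, my understanding is that training stops after five consecutive evaluations (roughly 5,000 training steps) without a WER improvement larger than the threshold. Below is a simplified sketch of that patience logic. It is not the library's implementation: `should_stop_at` is a hypothetical helper and the WER values are made up.

```python
def should_stop_at(wers, patience=5, threshold=0.0001):
    """Return the index of the evaluation at which training would stop, or None.

    Lower WER is better. An evaluation counts as an improvement only if it
    beats the best value so far by more than `threshold`.
    """
    best = None
    bad_evals = 0
    for i, wer in enumerate(wers):
        if best is None or (wer < best and best - wer > threshold):
            best = wer       # new best value, reset the patience counter
            bad_evals = 0
        else:
            bad_evals += 1   # no sufficient improvement at this evaluation
        if bad_evals >= patience:
            return i
    return None


# Made-up WER curve: improves for four evaluations, then degrades
wers = [0.60, 0.45, 0.38, 0.35, 0.36, 0.37, 0.39, 0.41, 0.44, 0.48]
print(should_stop_at(wers))
```

In this toy curve the best WER is reached at the fourth evaluation, so the run continues for five more evaluations before stopping.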

The model parameters were taken from another wav2vec fine-tuning example.

At first I used a higher learning rate and later reduced it to the current one.
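For context on how the learning rate evolves during a run: as far as I know, `Trainer` defaults to a linear schedule, so with `warmup_steps=500` the rate ramps up to the peak of `2.34e-4` and then decays linearly towards zero. A minimal sketch of that shape follows. `linear_schedule_lr` is a hypothetical helper, and `total_steps=20_000` is a placeholder, since the real step count depends on the dataset size.

```python
def linear_schedule_lr(step, peak_lr=2.34e-4, warmup_steps=500, total_steps=20_000):
    """Learning rate at `step` for linear warmup followed by linear decay.

    `total_steps` is a placeholder assumption, not the real length of the run.
    """
    if step < warmup_steps:
        # Warmup: ramp linearly from 0 to peak_lr
        return peak_lr * step / max(1, warmup_steps)
    # Decay: fall linearly from peak_lr to 0 at total_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 250, 500, 10_250, 20_000):
    print(step, linear_schedule_lr(step))
```

The peak rate is only reached at step 500, which is before the first evaluation at step 1000.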

I was thinking of using hyperparameter tuning (HPT) to find the parameters that give the best results, but I’d like to resolve this problem first.
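For the later tuning step, the search space could be sampled along these lines. This is a rough sketch only: the ranges are guesses placed around my current values, and `sample_config` is a hypothetical helper rather than part of any library. Each sampled dictionary would still have to be turned into a real training run.

```python
import math
import random


def sample_config(rng):
    """Draw one hyperparameter configuration (ranges are illustrative guesses)."""
    # Learning rate is sampled log-uniformly between 1e-5 and 1e-3
    log_lr = rng.uniform(math.log(1e-5), math.log(1e-3))
    return {
        "learning_rate": math.exp(log_lr),
        "attention_dropout": rng.uniform(0.0, 0.15),
        "hidden_dropout": rng.uniform(0.0, 0.15),
        "mask_time_prob": rng.uniform(0.02, 0.15),
        "layerdrop": rng.uniform(0.0, 0.1),
    }


rng = random.Random(0)  # fixed seed so the trials are reproducible
trials = [sample_config(rng) for _ in range(5)]
for config in trials:
    print(config)
```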

Any advice?