Hi! I am trying to create my own image classification dataset and am using the following code:

dataset = load_dataset("imagefolder", data_dir="Vials_all_cases")

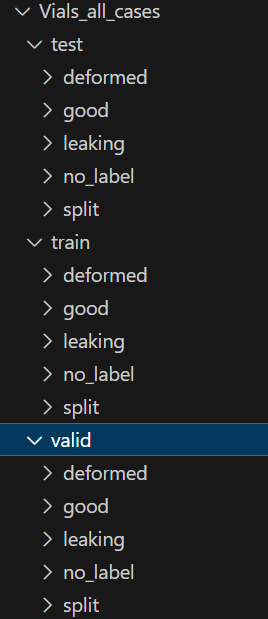

The structure of folders is the following:
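Since the folder layout matters for `imagefolder` (as far as I understand, it expects one sub-folder per class, optionally under split folders such as `train`), here is a rough sketch for printing the layout as text. The helper name `count_images_per_folder` and the extension list are my own assumptions for illustration and are not part of `datasets`.

```python
from collections import Counter
from pathlib import Path

# Assumption: a hand-picked set of common image extensions,
# not the exact list that `datasets` recognises.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".bmp", ".gif", ".tif", ".tiff", ".webp"}


def count_images_per_folder(data_dir):
    """Return {folder path relative to data_dir: number of image files directly inside it}."""
    root = Path(data_dir)
    counts = Counter()
    for path in root.rglob("*"):
        # Count only files whose extension looks like an image.
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            counts[path.parent.relative_to(root).as_posix()] += 1
    return dict(counts)


if __name__ == "__main__":
    # "Vials_all_cases" is the data_dir used in the load_dataset call.
    for folder, n in sorted(count_images_per_folder("Vials_all_cases").items()):
        print(f"{folder}: {n} image(s)")
```

If this prints nothing, no files with those extensions were found under the directory, which would at least be consistent with a split that generates 0 examples.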

First I get this output:

Generating train split: 0 examples [00:00, ? examples/s]

And then I get an error:

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

File c:\Projects\Vials_Transformers\.venv\lib\site-packages\datasets\builder.py:1646, in GeneratorBasedBuilder._prepare_split_single(self, gen_kwargs, fpath, file_format, max_shard_size, split_info, check_duplicate_keys, job_id)

1637 writer = writer_class(

1638 features=writer._features,

1639 path=fpath.replace("SSSSS", f"{shard_id:05d}").replace("JJJJJ", f"{job_id:05d}"),

(...)

1644 embed_local_files=embed_local_files,

1645 )

-> 1646 example = self.info.features.encode_example(record) if self.info.features is not None else record

1647 writer.write(example, key)

File c:\Projects\Vials_Transformers\.venv\lib\site-packages\datasets\features\features.py:1851, in Features.encode_example(self, example)

1850 example = cast_to_python_objects(example)

-> 1851 return encode_nested_example(self, example)

File c:\Projects\Vials_Transformers\.venv\lib\site-packages\datasets\features\features.py:1229, in encode_nested_example(schema, obj, level)

1227 raise ValueError("Got None but expected a dictionary instead")

1228 return (

-> 1229 {

1230 k: encode_nested_example(sub_schema, sub_obj, level=level + 1)

1231 for k, (sub_schema, sub_obj) in zip_dict(schema, obj)

1232 }

1233 if obj is not None

1234 else None

...

1664 e = e.__context__

-> 1665 raise DatasetGenerationError("An error occurred while generating the dataset") from e

1667 yield job_id, True, (total_num_examples, total_num_bytes, writer._features, num_shards, shard_lengths)

DatasetGenerationError: An error occurred while generating the dataset
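The traceback is truncated, so the actual `ImportError` message is not visible. One possible cause of an `ImportError` while `datasets` encodes image examples is that Pillow is not installed in the virtual environment. That is only an assumption, since nothing in the output above confirms it. A minimal sketch for checking it (the function name `pillow_available` is made up for this example):

```python
import importlib.util


def pillow_available():
    """Return True if the PIL package (distributed as 'Pillow') can be found."""
    return importlib.util.find_spec("PIL") is not None


if __name__ == "__main__":
    if pillow_available():
        print("Pillow is importable, so the ImportError probably comes from something else")
    else:
        print("Pillow is missing; installing it with `pip install Pillow` may help")
```

This needs to be run with the same interpreter as the failing code (here the `.venv` under `c:\Projects\Vials_Transformers`), otherwise the result says nothing about that environment.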