Hello everyone,

I hope you are all in good health!

I am having trouble training MaskFormer on a custom dataset.

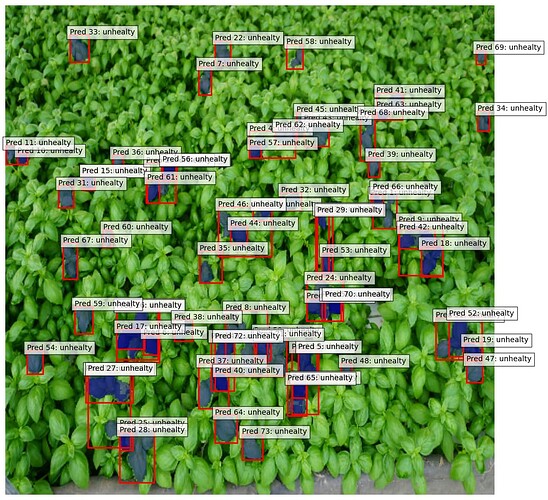

My data is an agricultural dataset of peppers with 6 classes.

I process the data as follows. First, I create the masks in the dataset's `__getitem__` method:

    import os

    import numpy as np
    import skimage.draw
    import torch
    from PIL import Image

    # Method of the custom Dataset class
    def __getitem__(self, idx):
        # Get image info
        image_info = self.images[idx]
        image_id = image_info['id']
        width, height = image_info['width'], image_info['height']

        # Load the image
        # Remove the '/datasets/' prefix (str.removeprefix needs Python 3.9+)
        relative_path = image_info['path'].removeprefix('/datasets/')
        image_path = os.path.join(self.root_dir, relative_path)
        image = Image.open(image_path).convert("RGB")
        if self.transform:
            image = self.transform(image)

        # Get annotations for the image
        annotations = self.image_id_to_annotations.get(image_id, [])
        segmentations = [anno.get('segmentation', []) for anno in annotations]
        category_ids = [anno['category_id'] for anno in annotations]

        # Create the semantic map (0 = no annotation)
        semantic_map = np.zeros((height, width), dtype=np.uint8)
        for seg, category_id in zip(segmentations, category_ids):
            if category_id == 11:  # Skip category 11
                continue
            for polygon in seg:
                # Flat [x0, y0, x1, y1, ...] list -> (N, 2) array of (x, y) points
                polygon = np.array(polygon).reshape(-1, 2)
                # skimage expects rows (y) first, then columns (x)
                rr, cc = skimage.draw.polygon(polygon[:, 1], polygon[:, 0], semantic_map.shape)
                semantic_map[rr, cc] = category_id

        semantic_map_tensor = torch.tensor(semantic_map, dtype=torch.uint8)

        return {
            'image': image,
            'semantic_map': semantic_map_tensor,
            'image_id': image_id,
            'width': width,
            'height': height,
        }
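
One detail in the path handling is worth illustrating: `str.lstrip` takes a set of characters to strip, not a literal prefix, so it can remove more than intended. The sketch below compares it with a small helper. The helper name `strip_prefix` and the example path are hypothetical and only serve as an illustration.

```python
def strip_prefix(path, prefix="/datasets/"):
    """Remove `prefix` from the start of `path` only if it is really there."""
    if path.startswith(prefix):
        return path[len(prefix):]
    return path


path = "/datasets/data/img.png"  # hypothetical path, for illustration only

# lstrip removes every leading character found in the set {'/', 'd', 'a', 't', 's', 'e'}
stripped_chars = path.lstrip("/datasets/")
# the helper removes only the literal prefix
stripped_prefix = strip_prefix(path)

print(stripped_chars)   # img.png
print(stripped_prefix)  # data/img.png
```

On Python 3.9 and later, `str.removeprefix` does the same thing as the helper.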

Then, to build the `id2label` mapping, I used this:

    # Needs to be checked later, date 13/01/2025, line: 193 new, and line 194 old
    # id2label = {10:"background", 11: "pepper_kp", 12: "pepper red", 13: "pepper yellow", 14: "pepper green", 15: "pepper mixed", 17: "pepper mixed_red", 18: "pepper mixed_yellow"}
    id2label = {11: "background", 12: "pepper red", 13: "pepper yellow", 14: "pepper green", 15: "pepper mixed", 17: "pepper mixed_red", 18: "pepper mixed_yellow"}

    # Remap to contiguous IDs
    # Note: despite its name, label2id maps original IDs to contiguous IDs (0..6)
    label2id = {old_id: new_id for new_id, old_id in enumerate(sorted(id2label.keys()))}
    id2label_remapped = {label2id[old_id]: label for old_id, label in id2label.items()}

    # Hex colour for each contiguous class ID
    id2color = {
        0: '#000000',
        1: '#c7211c',
        2: '#fff700',
        3: '#00ff00',
        4: '#e100ff',
        5: '#ff6600',
        6: '#d1c415',
    }

    # Convert '#rrggbb' strings to (R, G, B) tuples; classes without a colour get black
    palette = [
        tuple(int(id2color[class_id].lstrip('#')[i:i+2], 16) for i in (0, 2, 4)) if class_id in id2color else (0, 0, 0)
        for class_id in range(len(id2label_remapped))
    ]
    palette = np.array(palette, dtype=np.uint8)
    print(palette)
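
The remapping above only changes the keys of the label dictionary. A semantic map that stores the original category IDs would presumably need the same remap before it is passed to the model. Here is a minimal sketch, assuming unlabelled pixels are stored as 0 and should end up in contiguous class 0. The helper `remap_semantic_map` and the name `old2new` are hypothetical and not part of the original code.

```python
import numpy as np

# Mapping copied from the post: original category IDs to class names.
id2label = {11: "background", 12: "pepper red", 13: "pepper yellow",
            14: "pepper green", 15: "pepper mixed",
            17: "pepper mixed_red", 18: "pepper mixed_yellow"}

# Same remap as in the post: original ID -> contiguous ID (0..6).
old2new = {old_id: new_id for new_id, old_id in enumerate(sorted(id2label))}


def remap_semantic_map(semantic_map, old2new, fill=0):
    """Return a copy of a uint8 map with original IDs replaced by contiguous ones.

    Assumption: any value missing from old2new (such as the 0 used for
    unlabelled pixels) is mapped to `fill`.
    """
    lut = np.full(256, fill, dtype=np.uint8)  # lookup table covering all uint8 values
    for old_id, new_id in old2new.items():
        lut[old_id] = new_id
    return lut[semantic_map]


# Tiny hypothetical map: 0 = unlabelled, 12/14/18 = original category IDs.
raw = np.array([[0, 12, 12],
                [14, 0, 18]], dtype=np.uint8)
remapped = remap_semantic_map(raw, old2new)
print(remapped)
```

A lookup table keeps the remap vectorised, so it stays cheap even for large masks.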

    from transformers import MaskFormerImageProcessor

    # Create a preprocessor
    preprocessor = MaskFormerImageProcessor(
        ignore_index=0,
        reduce_labels=False,
        do_resize=False,
        do_rescale=False,
        do_normalize=False,
    )

Is this the correct way to preprocess the inputs before fine-tuning?