I decided to use do_reduce_labels=True together with ignore_index=255, following this discussion about a similar case:

Additionally, I found a similar scenario in a tutorial for fine-tuning Mask2Former, where the config.json file also has do_reduce_labels=True. According to the documentation, the preprocessing for MaskFormer and Mask2Former should be identical.

In the same file:

    # We need to specify the label2id mapping for the model
    # it is a mapping from semantic class name to class index.
    # In case your dataset does not provide it, you can create it manually:
    # label2id = {"background": 0, "cat": 1, "dog": 2}
    label2id = dataset["train"][0]["semantic_class_to_id"]

    if args.do_reduce_labels:
        label2id = {name: idx for name, idx in label2id.items() if idx != 0}  # remove background class
        label2id = {name: idx - 1 for name, idx in label2id.items()}  # shift class indices by -1
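To make the two steps concrete, here is a minimal sketch that applies them to the hypothetical mapping from the comment (`background`, `cat`, `dog`). The `do_reduce_labels` variable is only a stand-in for `args.do_reduce_labels`, and `id2label` is added for illustration.

```python
# Hypothetical mapping, taken from the commented example in the tutorial code.
label2id = {"background": 0, "cat": 1, "dog": 2}
do_reduce_labels = True  # stand-in for args.do_reduce_labels

if do_reduce_labels:
    # Drop the background class (index 0) ...
    label2id = {name: idx for name, idx in label2id.items() if idx != 0}
    # ... then shift the remaining indices down by one so they start at 0.
    label2id = {name: idx - 1 for name, idx in label2id.items()}

# Inverse mapping, built from the reduced label2id.
id2label = {idx: name for name, idx in label2id.items()}

print(label2id)  # {'cat': 0, 'dog': 1}
print(id2label)  # {0: 'cat', 1: 'dog'}
```

After this step the model config contains only the foreground classes, so the label values in the masks have to be shifted the same way.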

From what I gather (though I’m not entirely sure, as the documentation isn’t very clear), when you don’t want to treat the background as a segmentable class, the preprocessor replaces the background pixels (label 0) in the segmentation map with the value 255. Pixels with this value are then ignored during loss computation.
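My understanding of that label reduction can be sketched in plain Python as below. This is not the library code, only a stand-in under the assumption that background is label 0 and that every other label is shifted down by one; the function name `reduce_labels` and the toy mask are made up for the example.

```python
def reduce_labels(mask, ignore_index=255):
    """Map background (0) to ignore_index and shift every other label down by 1.

    Assumption: this mirrors what do_reduce_labels=True is understood to do;
    it is an illustrative stand-in, not the processor's implementation.
    """
    reduced = []
    for row in mask:
        new_row = []
        for value in row:
            if value == 0 or value == ignore_index:
                # Background (or already-ignored) pixels become ignore_index.
                new_row.append(ignore_index)
            else:
                # Foreground labels 1..N become 0..N-1.
                new_row.append(value - 1)
        reduced.append(new_row)
    return reduced


# Toy 3x3 segmentation map: 0 = background, 1 and 2 = foreground classes.
mask = [
    [0, 0, 1],
    [0, 2, 2],
    [1, 1, 0],
]
reduced = reduce_labels(mask)
print(reduced)  # [[255, 255, 0], [255, 1, 1], [0, 0, 255]]
```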

That is why I set ignore_index=255. I don’t think the ignore_index value can be chosen arbitrarily: for example, if I have {0: 'garden', 1: 'car', 2: 'tree'} and set ignore_index=1, the ‘car’ class will be ignored during loss computation.
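A small sketch of why the value matters, assuming that pixels equal to ignore_index are simply left out of the targets. The helper `labels_with_targets` and the toy mask are hypothetical and only illustrate the collision with a real class id.

```python
def labels_with_targets(mask, ignore_index):
    """Return the sorted label values that would still get a target.

    Assumption: any pixel equal to ignore_index is dropped from the targets.
    """
    present = {value for row in mask for value in row}
    return sorted(present - {ignore_index})


id2label = {0: "garden", 1: "car", 2: "tree"}

# Toy mask after label reduction: 255 marks the former background.
mask = [
    [0, 1, 1],
    [2, 2, 255],
]

# ignore_index=255 does not collide with any class id, so all classes are kept.
kept_255 = [id2label[i] for i in labels_with_targets(mask, 255) if i in id2label]

# ignore_index=1 collides with 'car', which silently disappears from the targets.
kept_1 = [id2label[i] for i in labels_with_targets(mask, 1) if i in id2label]

print(kept_255)  # ['garden', 'car', 'tree']
print(kept_1)    # ['garden', 'tree']
```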

The parameter do_reduce_labels=True ensures that classes start from 0 and increment upward, which is why they are shifted by -1.

Example (both models trained for 20 epochs with a learning rate of 5e-5)

Test Image:

Preprocessor for MaskFormer:

    self.processor = AutoImageProcessor.from_pretrained(
        "facebook/maskformer-swin-small-coco",
        do_reduce_labels=True,
        reduce_labels=True,
        ignore_index=255,
        do_resize=False,
        do_rescale=False,
        do_normalize=False,
    )

Results with MaskFormer:

| Test metric | DataLoader 0 |
|---|---|
| test_loss | 1.0081120729446411 |
| test_map | 0.038004860281944275 |
| test_map_50 | 0.06367719173431396 |
| test_map_75 | 0.040859635919332504 |
| test_map_large | 0.5004204511642456 |
| test_map_medium | 0.04175732284784317 |
| test_map_small | 0.007470746990293264 |
| test_mar_1 | 0.01011560671031475 |
| test_mar_10 | 0.05838150158524513 |
| test_mar_100 | 0.06329479813575745 |

Test Image Result with MaskFormer:

Preprocessor for Mask2Former:

    self.id2label = {0: "unhealty"}
    self.label2id = {v: int(k) for k, v in self.id2label.items()}

    self.processor = AutoImageProcessor.from_pretrained(
        "facebook/mask2former-swin-small-coco-instance",
        do_reduce_labels=True,
        reduce_labels=True,
        ignore_index=255,
        do_resize=False,
        do_rescale=False,
        do_normalize=False,
    )

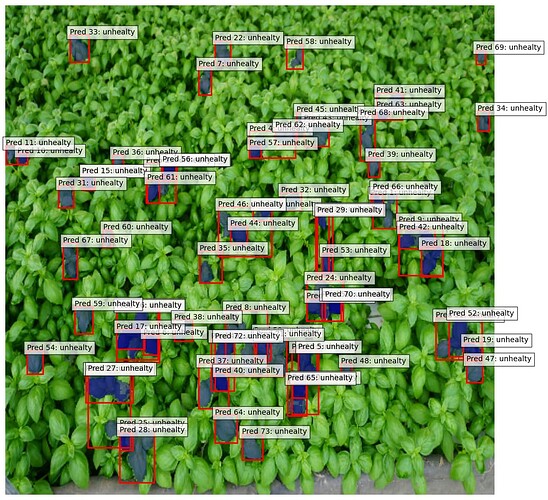

Results with Mask2Former:

| Test metric | DataLoader 0 |
|---|---|
| test_loss | 15.374979972839355 |
| test_map | 0.44928184151649475 |
| test_map_50 | 0.6224347949028015 |
| test_map_75 | 0.5011898279190063 |
| test_map_large | 0.8390558958053589 |
| test_map_medium | 0.6270320415496826 |
| test_map_small | 0.32075226306915283 |
| test_mar_1 | 0.03526011481881142 |
| test_mar_10 | 0.24104046821594238 |
| test_mar_100 | 0.5274566411972046 |

Test Image Result with Mask2Former:

As you can see, the results are very different even though the code is identical apart from the parts where the model type is changed. If you want, I can share the code.