Hi all, below is my code:

from langchain.llms import HuggingFaceHub

from langchain import PromptTemplate, LLMChain

repo_id="mistralai/Mistral-7B-Instruct-v0.2"

llm=HuggingFaceHub(repo_id=repo_id)

response=llm.invoke("what is the capital of USA")

print(response)

Error below:


Ongoing issue.

opened 04:58PM - 06 May 25 UTC

bug

### Describe the bug

The InferenceClient API still does not support many of the tasks that can be hosted at inference endpoints, but gives a deprecation warning when using `.post` to get around this.

### Reproduction

```python
from huggingface_hub import InferenceClient, get_inference_endpoint
import json

# Get endpoint and create client
MODEL_NAME = "YOUR_MODEL_NAME_OR_ENDPOINT_NAME"
NAMESPACE = "YOUR_NAMESPACE"
endpoint = get_inference_endpoint(MODEL_NAME, namespace=NAMESPACE)
client = InferenceClient(endpoint.url, timeout=10)

# Test data
query = "What is the capital of France?"
document = "Paris is the capital of France."
sentence_ranking_style_inputs = [[query, document]]
text_classification_style_inputs = [{"text": query, "text_pair": document}]

# 1. Using post method
response_bytes = client.post(json={"inputs": sentence_ranking_style_inputs})
print(json.loads(response_bytes))
# Problem: post method has deprecation warning

# 2. Using text_classification task
try:
    result = client.text_classification(text_classification_style_inputs)  # There's no way to inject the inputs format that would have worked on this task for reranking
    print(result)
except Exception as e:
    print(f"text_classification error: {e}")
# Problem: text_classification doesn't properly support text pairs format needed for reranking/cross-encoding

# 3. Using sentence_similarity task
try:
    result = client.sentence_similarity(sentence_ranking_style_inputs)  # There's no direct way to inject the inputs format that would have worked on this task for reranking
    print(result)
except Exception as e:
    print(f"sentence_similarity error: {e}")
# Problem: No direct support for sentence ranking despite endpoint supporting this task
```

Ideally, the sentence_ranking task is supported.
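Until a dedicated task exists, one possible stopgap is to send the same JSON payload over plain HTTP instead of going through `client.post`. The following is only a minimal sketch using the Python standard library. It assumes the endpoint accepts a bearer token and the `{"inputs": [[query, document]]}` body shown in the reproduction; the function names `build_rerank_request` and `rerank` are hypothetical helpers, not part of `huggingface_hub`.

```python
import json
import urllib.request


def build_rerank_request(endpoint_url, token, pairs):
    """Build (but do not send) a POST request carrying [[query, document], ...] pairs."""
    # Assumption: the endpoint expects the same {"inputs": ...} body that was passed to client.post.
    body = json.dumps({"inputs": pairs}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def rerank(endpoint_url, token, pairs, timeout=10):
    """Send the request and decode whatever JSON the endpoint returns."""
    request = build_rerank_request(endpoint_url, token, pairs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the variables from the reproduction, a call would look like `rerank(endpoint.url, token, sentence_ranking_style_inputs)`, where `token` is your own access token. The shape of the returned JSON depends on the deployed model, so it is left undecoded beyond `json.loads`.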

### Logs

```shell

```

### System info

```shell
- huggingface_hub version: 0.30.2
- Platform: macOS-15.4-arm64-arm-64bit
- Python version: 3.12.10
- Running in iPython ?: No
- Running in notebook ?: No
- Running in Google Colab ?: No
- Running in Google Colab Enterprise ?: No
- Token path ?: redacted
- Has saved token ?: False
- Configured git credential helpers: redacted
- FastAI: N/A
- Tensorflow: N/A
- Torch: 2.7.0
- Jinja2: 3.1.6
- Graphviz: N/A
- keras: N/A
- Pydot: N/A
- Pillow: 11.2.1
- hf_transfer: N/A
- gradio: N/A
- tensorboard: N/A
- numpy: 1.26.4
- pydantic: 2.11.4
- aiohttp: 3.11.18
- hf_xet: N/A
- ENDPOINT: https://huggingface.co
- HF_HUB_CACHE: redacted
- HF_ASSETS_CACHE: redacted
- HF_TOKEN_PATH: redacted
- HF_STORED_TOKENS_PATH: redacted
- HF_HUB_OFFLINE: False
- HF_HUB_DISABLE_TELEMETRY: False
- HF_HUB_DISABLE_PROGRESS_BARS: None
- HF_HUB_DISABLE_SYMLINKS_WARNING: False
- HF_HUB_DISABLE_EXPERIMENTAL_WARNING: False
- HF_HUB_DISABLE_IMPLICIT_TOKEN: False
- HF_HUB_ENABLE_HF_TRANSFER: False
- HF_HUB_ETAG_TIMEOUT: 10
- HF_HUB_DOWNLOAD_TIMEOUT: 10
```

Solution for SentenceTransformers:

Any solution, guys?

Kindly provide the solution.


Hmm… It seems that this cannot be resolved with a user patch…

Still no solution for this?

Kindly resolve: AttributeError: 'InferenceClient' object has no attribute 'post'
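For anyone hitting this, it may help to first confirm which versions are installed and whether the local `InferenceClient` still exposes `post`. Below is a small diagnostic sketch using only the standard library plus an optional import of `huggingface_hub`. The package list is an assumption about what a typical LangChain setup installs, and `installed_versions` and `client_has_post` are hypothetical helper names.

```python
from importlib import metadata

# Assumption: these are the distributions most likely involved in the error.
PACKAGES = ("huggingface_hub", "langchain", "langchain-community", "langchain-huggingface")


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if it is absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def client_has_post():
    """Return True/False if huggingface_hub is importable, or None if it is not installed."""
    try:
        from huggingface_hub import InferenceClient
    except ImportError:
        return None
    return hasattr(InferenceClient, "post")


for package, version in installed_versions().items():
    print(f"{package}: {version or 'not installed'}")
print("InferenceClient has .post:", client_has_post())
```

If the last line prints `False`, any wrapper that still calls `client.post(...)` internally will raise the `AttributeError` above, regardless of how your own code is written.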


Seems WIP?

main ← copilot/fix-3055

opened 05:55PM - 23 May 25 UTC

Thanks for assigning this issue to me. I'm starting to work on it and will keep … this PR's description up to date as I form a plan and make progress.


Fixes #3055.


It may be possible to fix this on the langchain side, but I don’t think it has been done yet.


You’re using InferenceClient which doesn’t have a .post() method. LangChain expects HuggingFaceHub for this to work.

Swap it to:

from langchain.llms import HuggingFaceHub

That’ll stop the .post() error.

Solution provided by Triskel Data Deterministic AI


Your solution is not working.


Dear respected brother, I never denigrated your solution… When I ran your code on Colab, it showed bugs or errors…

Good day bro


I also wasted a lot of time on this… I tried a lot of things and found that everyone is struggling with the same issue.


I'm running into the same error too:

llm=HuggingFaceHub(repo_id="mistralai/Mistral-7B-Instruct-v0.2")

prompt = PromptTemplate(

chain= LLMChain(prompt=prompt,llm=llm,verbose=True)


Can anyone help me with this issue?


Use the code below. It resolves the error, and you can then prompt the model:

repo_id="mistralai/Mistral-7B-Instruct-v0.3"

llm = HuggingFaceEndpoint(

print(llm.invoke("write a poem on deep learning , for children age 10 "))


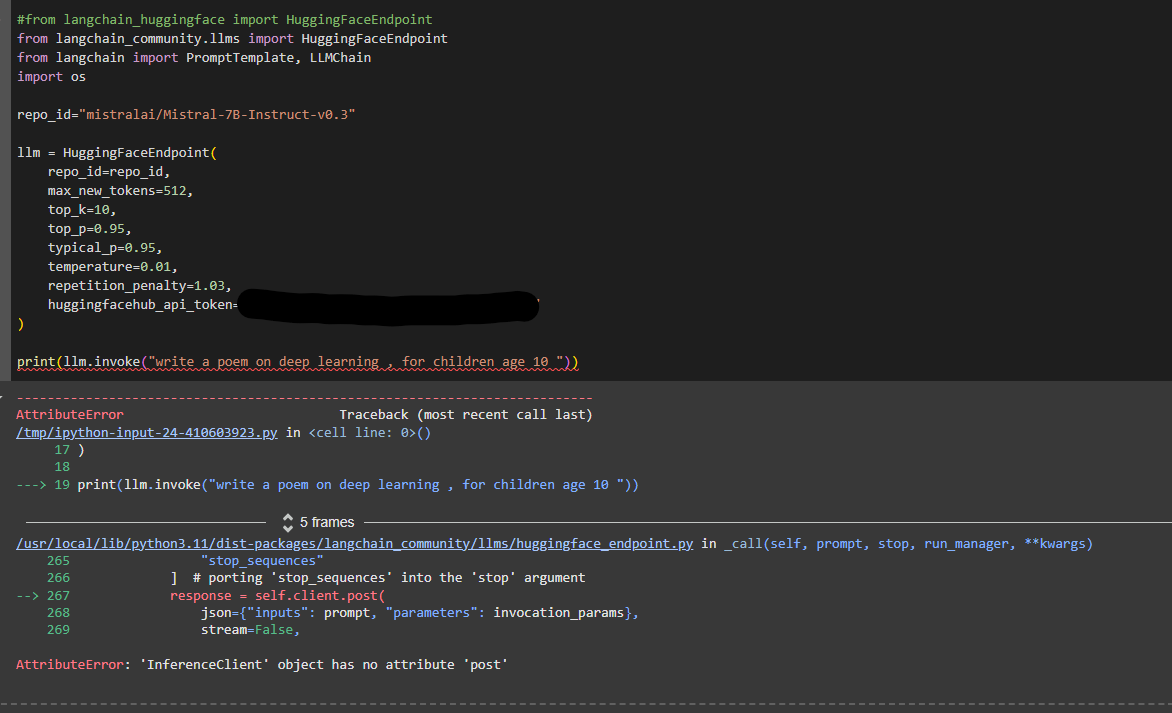

There is an issue with the HuggingFaceEndpoint package:

#from langchain_huggingface import HuggingFaceEndpoint

from langchain_community.llms import HuggingFaceEndpoint

from langchain import PromptTemplate, LLMChain

import os

repo_id="mistralai/Mistral-7B-Instruct-v0.3"

llm = HuggingFaceEndpoint(

repo_id=repo_id,

max_new_tokens=512,

top_k=10,

top_p=0.95,

typical_p=0.95,

temperature=0.01,

repetition_penalty=1.03,

huggingfacehub_api_token="hf_*****"

)

print(llm.invoke("write a poem on deep learning , for children age 10 "))


Even I am facing the same issue:


InferenceClient.post has been deprecated and removed, which is why the AttributeError appears.

The solution that worked for me is importing InferenceClient directly. HuggingFaceEndpoint and HuggingFacePipeline don't seem to work with the new version of huggingface_hub.

import os

from huggingface_hub import InferenceClient

client = InferenceClient(

"mistralai/Mistral-7B-Instruct-v0.2", #"openai/gpt-oss-120b"

token=os.environ["HUGGINGFACEHUB_API_TOKEN"]

)

resp = client.chat.completions.create(

model="mistralai/Mistral-7B-Instruct-v0.2", #"openai/gpt-oss-120b"

messages=[

{"role": "user", "content": "What is the health insurance coverage?"}

],

max_tokens=1000,

temperature=0.1

)

print(resp.choices[0].message["content"])
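If you want something closer to the old `llm.invoke("...")` call, the chat-completions snippet above can be wrapped in a small helper. This is just a sketch: `ask` is a hypothetical name, the defaults are arbitrary, and `client` is assumed to be a `huggingface_hub.InferenceClient` (or anything with the same `chat.completions.create` interface).

```python
def ask(client, prompt, model, max_tokens=512, temperature=0.1):
    """Send one user prompt through the chat-completions API and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
        temperature=temperature,
    )
    # Same access pattern as the snippet above: first choice, message content.
    return response.choices[0].message["content"]
```

Usage would then be `print(ask(client, "what is the capital of USA", model="mistralai/Mistral-7B-Instruct-v0.2"))`. Passing the client in as an argument keeps the helper easy to test with a stand-in object.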
