I just published the first part of a tutorial series on the Muon Optimizer.

Muon (Momentum Orthogonalized by Newton-Schulz) is quickly becoming the go-to optimizer for large-scale training. It's already being used to train trillion-parameter frontier models such as Kimi K2 (via the MuonClip variant), and it was critical for the ATLAS paper, where first-order optimizers failed.
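To make the name concrete: for each 2-D weight matrix, Muon keeps a momentum buffer of the gradients and replaces it with an approximately orthogonal matrix (the polar factor U Vᵀ from its SVD) before taking a step. It gets there with Newton-Schulz iterations instead of an actual SVD. Here's a minimal NumPy sketch of that idea under a few assumptions: it uses the textbook cubic Newton-Schulz iteration, whereas (as far as I know) the reference Muon code runs about five steps of a tuned quintic polynomial in bfloat16; and `muon_step` is a hypothetical helper that uses plain momentum and skips details like Nesterov momentum and shape-dependent learning-rate scaling. Treat it as an illustration, not a drop-in optimizer.

```python
import numpy as np


def newton_schulz_orthogonalize(g: np.ndarray, steps: int = 30, eps: float = 1e-7) -> np.ndarray:
    """Approximate the orthogonal polar factor U @ V^T of g, where g = U S V^T.

    Assumption: textbook cubic Newton-Schulz, X <- 1.5 X - 0.5 X X^T X,
    not the tuned quintic polynomial used by the reference Muon code.
    """
    x = g.astype(np.float64)
    # Frobenius normalization puts every singular value in (0, 1],
    # inside the cubic iteration's convergence region (0, sqrt(3)).
    x = x / (np.linalg.norm(x) + eps)
    transposed = x.shape[0] > x.shape[1]
    if transposed:
        # Work in the wide orientation so x @ x.T is the smaller Gram matrix.
        x = x.T
    for _ in range(steps):
        # Each step maps every singular value s to 1.5 s - 0.5 s^3, pushing it toward 1
        # while leaving the singular vectors unchanged.
        x = 1.5 * x - 0.5 * (x @ x.T) @ x
    return x.T if transposed else x


def muon_step(weight, grad, momentum_buf, lr=0.02, beta=0.95):
    """One simplified Muon-style update for a 2-D weight (hypothetical helper).

    Assumption: plain heavy-ball momentum, no Nesterov, no shape-based lr scaling.
    """
    # Accumulate momentum, then orthogonalize it and step along that direction.
    momentum_buf = beta * momentum_buf + grad
    update = newton_schulz_orthogonalize(momentum_buf)
    return weight - lr * update, momentum_buf
```

Comparing the output against `U @ Vt` from `np.linalg.svd` is a quick way to check that the iteration converged. The appeal of Newton-Schulz is that it only needs matrix multiplications, which run efficiently on GPUs.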

In this series, I'm breaking Muon down step by step: intuition, pseudocode, a PyTorch implementation, and practical guidance on when and where to use it.

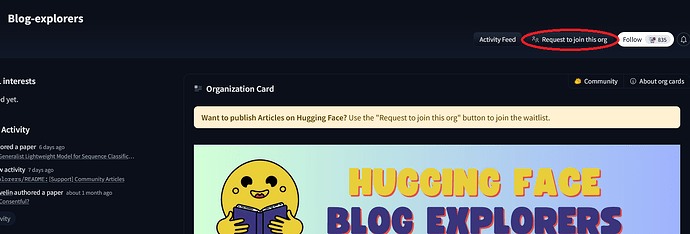

Also, I'd really like to contribute this as a guest article to the Hugging Face blog. I know the blog is managed by a group, but it looks like external contributors can't join it directly. If anyone here has advice or connections on how to submit contributions, I'd love to hear from you.

Muon deserves more attention in the open-source community, and I’d be excited to help bridge that gap.