When I subscribed to the PRO membership, a prompt said that $10 would be deducted and then refunded after “completion”.

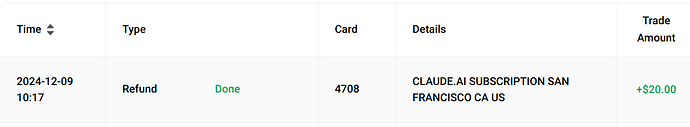

I was charged $10 + $9: $9 was the membership fee and $10 was some kind of deposit. My account now shows as a PRO member, but my $10 has not been refunded.

I am a low-income person. When I was working, I earned only a meager 22 dollars a day for 8+ hours of work, writing a management system backend in Golang at a small company.

Later I resigned. I am particularly fond of deep learning, especially NLP and embeddings. It amazes me that I can search for images by text using cosine similarity.

However, this cannot change my financial situation.

I plan to open a Space on Hugging Face, but I need a GPU because the model is an LLM modeled after chatgpt-o1, and a CPU probably cannot run it.

I tried to quantize the model, but failed. The gguf-my-repo Space reported that the model was not supported. I can only run it with a GPU, so I had to pay for a PRO membership.

Then everything I described at the beginning happened.

I am not begging for pity; I just want to say that I do not think I did anything wrong. It seems that there is a bug in the system: I did not get the $10 deposit back. My financial situation is not good. If I could earn $100 a day, I would not care about this.

Please look into this. I will keep following up until I receive the deposit I am owed.